Abstract

The paper presents the Leap Motion technology and its dedicated software development environments, as well as some results of using it for learning and training. The Leap Motion Controller is a Human Interface Device (HID) that is connected to a computer and enables the user to interact with the digital system through hand movements, simply by making gestures above the device, without physical contact. The captured information is sent to the software on the host computer, where 3D information is reconstructed with sub-millimeter precision from the 2D images provided by the cameras. The development of dedicated applications benefits from APIs for JavaScript, Unity, C#, C++, Java, Python, Objective-C and Unreal Engine. The Leap Motion can thus be easily used in Virtual Reality applications, in games or simply as a touchless interface for various software products, adding a new way to interact with the digital environment. The applications presented use gestures to play games, create art, practice exercises and learn through a ‘hands-on’ experience. The device is a great tool for experimentation and learning, allowing students to have natural 3D interactions in domains like sculpture, painting, design, music, medicine, natural sciences and engineering. With Leap Motion, students can have immersive experiences in Virtual Reality environments or intuitively control digital devices in the real world using a simple and affordable interface.

Keywords: Leap Motion, Human-Computer Interface, Virtual Reality

Introduction

Human-Computer Interface Evolution

Computers and digital devices play a major role in our lives. The communication with them, called Human-Computer Interaction (HCI), takes place through Human-Computer Interfaces, represented by the hardware and software that allow the user to interact with the computer. Their evolution spans the last decades: from the troublesome punch cards of the 50s and the command-line consoles of the 60s and 70s to the graphical user interfaces that started at the end of the 80s and continue to the present day with windows and icons (Dix et al., 2004). The control of these devices is dominated by the keyboard and mouse pair, but in recent years new contenders have emerged, like multi-touch screens and, lately, natural interfaces. A natural user interface directly detects body movements and voice commands, eliminating intermediary interfaces like keyboards, mice or touch screens (Tate, 2013). The ability to sense interaction without physical engagement has been studied with interest over the last decade and is a continuously growing trend (Harper et al., 2008), nowadays using eye movements and envisaging the exploitation of brain waves in brain-computer interfaces (Hwang et al., 2013).

Digital Devices in Learning

The use of digital devices in the classroom is effective because, on the one hand, it enlarges the quantity of information accessible to students and, on the other hand, it improves their emotional state and motivation, since they are able to work with their favorite tools. Digital devices enlarge and improve collaboration in the classroom, create and advance new learning environments, including interdisciplinary ones (Pavaloiu, Petrescu & Dragomirescu, 2014), and engage students in new and exciting ways. In an online environment, opportunities for collaboration and problem-solving expand beyond the classroom.

The increasing use of digital devices makes learning with and about them essential, as it prepares students to participate in society and in the future workforce. Learning takes place more and more in the Augmented Reality (AR) and Virtual Reality (VR) domains (Baya & Null, 2016). While in AR digital information is added to the real world, in VR the whole experience is translated into a virtual digital world. Natural user interfaces create new forms of interaction and transport the operator and the controlled device into a virtual dimension superposed on the real space, improving the perceived experience and the naturalness of the interaction. They allow intricate operation of devices and access to fields like sculpting, playing virtual instruments, surgery and many others.

For several years, we have been investigating the performance of AR and VR devices in learning at the Robotics and Virtual Reality Laboratory of the Faculty of Engineering in Foreign Languages of the University POLITEHNICA of Bucharest (Pavaloiu, Dinu, & Codârnai, 2016), (Pavaloiu, Sandu, Grigorescu, Ioaniţescu, & Dragoi, 2016), (Pavaloiu, Sandu, Grigorescu, & Dragoi, 2016). In the following, I will present the Leap Motion Controller (LMC) and several experiments carried out with students, either as semester projects or as graduation projects. I strongly believe that natural interfaces (like the LMC) will play a central role, both in the interaction with computers and in learning, in the coming years.

Leap Motion Device

Description

The Leap Motion Controller is a natural user interface for digital devices that allows touch-free control, using hand gestures as input. It is a small and simple device as shown in Fig.

hand and finger tracking. It has three infrared LEDs, which emit light with a wavelength of 850 nanometers (outside the visible spectrum), and two cameras that capture the reflected light in this spectrum. The device is compact, small (a few centimeters) and light (32 grams), making it easy to use and to carry anywhere. Because of its simplicity, it is affordable, priced at 56 USD at the time of writing. The distance monitored by the LMC ranges from a few centimeters up to almost one meter.
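The reconstruction of 3D positions from the images of the two infrared cameras rests, in general terms, on stereo triangulation. The LMC's actual processing is proprietary, so the following is only a minimal sketch of the textbook principle for an idealized, rectified camera pair: depth Z = f·B/d, where f is the focal length in pixels, B the baseline between the cameras and d the horizontal disparity of the same point in the two images. The focal length, baseline and principal point values are hypothetical placeholders, not LMC specifications.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class StereoRig:
    """Idealized, rectified stereo camera pair (hypothetical values, not LMC specs)."""
    focal_px: float     # focal length, in pixels
    baseline_mm: float  # distance between the two camera centers, in millimeters
    cx: float           # principal point x coordinate, in pixels
    cy: float           # principal point y coordinate, in pixels


def triangulate(rig: StereoRig, x_left: float, x_right: float, y: float) -> Tuple[float, float, float]:
    """Recover (X, Y, Z) in millimeters from matching pixels in the two images.

    In a rectified pair a point appears on the same image row in both
    cameras, so depth depends only on the horizontal disparity.
    """
    disparity = x_left - x_right
    if disparity <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    z = rig.focal_px * rig.baseline_mm / disparity  # Z = f * B / d
    x = (x_left - rig.cx) * z / rig.focal_px        # back-project through the left camera
    y_3d = (y - rig.cy) * z / rig.focal_px
    return x, y_3d, z


rig = StereoRig(focal_px=400.0, baseline_mm=40.0, cx=320.0, cy=120.0)
print(triangulate(rig, x_left=340.0, x_right=300.0, y=120.0))  # (20.0, 0.0, 400.0)
print(triangulate(rig, x_left=330.0, x_right=310.0, y=120.0))  # half the disparity, twice the depth
```

Because Z is inversely proportional to the disparity, a fixed one-pixel matching error produces a larger depth error for distant points than for near ones.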

Functioning

The LMC uses a USB 2.0 connection to transfer data to the computer. The device has a sensing space of nearly a quarter of a cubic meter, shaped like an inverted pyramid, with a maximum working distance of 80 cm. The data takes the form of a sequence of pairs of grayscale stereo images in the near-infrared spectrum, representing mainly the objects illuminated by the LMC's IR LEDs, but also ghosts produced by other light sources in that spectrum. The Leap Motion has two tracking modes: standard (on a table) and head-mounted, the latter being useful when the device is used together with VR headsets like the Oculus Rift.
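As a rough approximation, the inverted-pyramid sensing space can be modeled with its apex at the device and a rectangular cross-section. The sketch below assumes two hypothetical half-angles (along x and z) and the 80 cm maximum height quoted above. It checks whether a point lies inside the volume and computes V = (1/3)·(top area)·height. The 31° half-angles are placeholders, chosen only so that the volume comes out near the quarter of a cubic meter mentioned in the text. They are not official specifications. Following the Leap convention, y is assumed to point upward from the device.

```python
import math


def pyramid_volume(height_m: float, half_angle_x_deg: float, half_angle_z_deg: float) -> float:
    """Volume of an inverted pyramid with its apex at the sensor and a rectangular top face.

    At height h the half-widths are h*tan(ax) and h*tan(az), so the top face
    has area (2h*tan(ax)) * (2h*tan(az)) and V = area * h / 3.
    """
    tx = math.tan(math.radians(half_angle_x_deg))
    tz = math.tan(math.radians(half_angle_z_deg))
    top_area = (2 * height_m * tx) * (2 * height_m * tz)
    return top_area * height_m / 3


def inside_volume(x: float, y: float, z: float, height_m: float,
                  half_angle_x_deg: float, half_angle_z_deg: float) -> bool:
    """True if (x, y, z) in meters, with y measured upward from the sensor, lies in the pyramid."""
    if not 0 < y <= height_m:
        return False
    max_x = y * math.tan(math.radians(half_angle_x_deg))  # the pyramid widens with height
    max_z = y * math.tan(math.radians(half_angle_z_deg))
    return abs(x) <= max_x and abs(z) <= max_z


# Hypothetical geometry: 0.8 m height (from the text), 31-degree half-angles (assumed).
H, AX, AZ = 0.8, 31.0, 31.0
print(round(pyramid_volume(H, AX, AZ), 3))      # roughly a quarter of a cubic meter
print(inside_volume(0.1, 0.3, 0.0, H, AX, AZ))  # near the axis, 30 cm above the device
print(inside_volume(0.5, 0.3, 0.0, H, AX, AZ))  # too far to the side at that height
```

Because the cross-section grows linearly with height, a hand close to the device has much less lateral room than one held higher up.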

The main difference between the Leap Motion and the Kinect, a popular natural interface device produced by Microsoft, initially for gaming, is that the Leap is able to track specific small objects (hands and pen-like tools) in a smaller volume, but with much higher accuracy. This makes high-precision drawing, the manipulation of 3D objects and pinch-to-zoom gestures on maps possible. The Kinect is focused on capturing whole, large objects, which makes it less sensitive to small movements, like moving the fingers or a pen. Unlike the Microsoft Kinect, the Leap Motion does not provide access to raw data in the form of a point cloud. The captured data is processed by proprietary drivers supplied by the vendor and is accessible through a dedicated API, which makes the device an HCI interface rather than a general-purpose 3D scanner. The LMC data is presented as a series of snapshots called frames. One frame consists of objects like Hands, Fingers and Pointables (fingers and tools, where tools are objects longer and thinner than fingers), plus additional information like the frame timestamp, rotation, translation, scaling and other data. The frame rate depends on where the data is consumed: it is limited to 60 frames per second in a browser, while on the device it can reach 200 fps.
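To make the frame concept concrete, the following sketch models a frame as plain Python data classes. The names (Frame, Hand, Pointable, timestamp_us, palm_position, is_tool) are hypothetical stand-ins that echo the description above. They are not the SDK's actual classes. The assumption that timestamps are expressed in microseconds should be checked against the SDK documentation. The helpers compute the frame period for a given rate (1000/fps ms, about 16.7 ms at 60 fps and 5 ms at 200 fps) and the palm translation and speed between two frames.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

Vec3 = Tuple[float, float, float]  # (x, y, z) in millimeters


@dataclass
class Pointable:
    """A finger or a tool (hypothetical minimal fields)."""
    id: int
    tip_position: Vec3
    is_tool: bool = False


@dataclass
class Hand:
    id: int
    palm_position: Vec3
    pointables: List[Pointable] = field(default_factory=list)


@dataclass
class Frame:
    id: int
    timestamp_us: int  # assumed unit: microseconds
    hands: List[Hand] = field(default_factory=list)


def frame_period_ms(fps: float) -> float:
    """Time between consecutive frames for a given frame rate."""
    return 1000.0 / fps


def palm_translation(prev: Frame, curr: Frame, hand_id: int) -> Vec3:
    """Displacement (mm) of one hand's palm between two frames."""
    p = next(h for h in prev.hands if h.id == hand_id).palm_position
    c = next(h for h in curr.hands if h.id == hand_id).palm_position
    return (c[0] - p[0], c[1] - p[1], c[2] - p[2])


def palm_speed_mm_s(prev: Frame, curr: Frame, hand_id: int) -> float:
    """Average palm speed between two frames, derived from their timestamps."""
    dx, dy, dz = palm_translation(prev, curr, hand_id)
    dt_s = (curr.timestamp_us - prev.timestamp_us) / 1e6
    return (dx * dx + dy * dy + dz * dz) ** 0.5 / dt_s


print(round(frame_period_ms(60), 2), frame_period_ms(200))  # 16.67 5.0
f0 = Frame(1, 0, [Hand(7, (0.0, 200.0, 0.0))])
f1 = Frame(2, 5000, [Hand(7, (3.0, 204.0, 0.0))])  # one 200 fps frame later
print(palm_translation(f0, f1, 7), palm_speed_mm_s(f0, f1, 7))
```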

Although Leap Motion (2012) claimed that the device would be more accurate than a mouse, as reliable as a keyboard and more sensitive than a touch screen, there is still some distance to go before that performance is reached. As a standard input device for a PC in 2D pointing tasks, the LMC is limited compared to a normal mouse because of its long movement times, high overall effort ratings and higher error rate (Bachmann, Weichert, & Rinkenauer, 2015). In terms of raw tracking accuracy, however, the deviation between a desired 3D position and the average measured positions has been found to be below 0.2 mm for static setups and 1.2 mm for dynamic setups. This deviation has only a limited impact on the LMC's practical results, since the amplitude of involuntary human hand tremor is itself 0.4 mm ± 0.2 mm (Weichert, Bachmann, Rudak, & Fisseler, 2013).
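The accuracy figure quoted above compares a desired 3D position with the average of repeated measurements. The sketch below shows that computation in its simplest form: average the samples, take the Euclidean distance to the target, and compare the result with the 0.4 mm nominal tremor amplitude. It illustrates the metric only as described here and does not reproduce the experimental protocol of Weichert et al. (2013). The sample readings are synthetic.

```python
import math
from statistics import mean
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]  # millimeters


def mean_position(samples: Sequence[Vec3]) -> Vec3:
    """Component-wise average of repeated position measurements."""
    return (mean(s[0] for s in samples),
            mean(s[1] for s in samples),
            mean(s[2] for s in samples))


def deviation_mm(desired: Vec3, samples: Sequence[Vec3]) -> float:
    """Euclidean distance between the desired position and the mean measured position."""
    return math.dist(desired, mean_position(samples))


def compare_with_tremor(deviation: float, tremor_mm: float = 0.4) -> str:
    """Classify a deviation relative to a nominal hand-tremor amplitude (0.4 mm in the text)."""
    return "below tremor" if deviation < tremor_mm else "at or above tremor"


# Synthetic, illustrative readings (mm), NOT measurements from the cited study.
target = (0.0, 150.0, 0.0)
readings = [(0.1, 150.0, 0.0), (-0.1, 150.2, 0.0), (0.0, 149.8, 0.0), (0.0, 150.0, 0.3)]
d = deviation_mm(target, readings)
print(round(d, 3), compare_with_tremor(d))
```

Averaging before measuring the distance separates systematic error (bias) from random jitter. The spread of the individual readings around the mean would be reported separately as repeatability.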

Programming Leap Motion

One of the major strengths of the device is the availability of open-source software. The developer community grew from the 12,000 developers who received test units in October 2012 through the Software Developer Program to over 200,000 people today. Enthusiasts benefit from well-documented APIs and libraries, accessible on the dedicated portal at

released Orion, a major update to its core software, designed specifically for hand tracking in Virtual Reality. The Leap Motion SDK contains a C++ library and a C library that define the API to the LMC tracking data. Wrapper classes for these libraries define language bindings for C# and Objective-C, while the bindings for Java and Python are generated with SWIG, an open-source tool. Except for leap.js, the client-side JavaScript library, all the files needed to develop applications (libraries, header files, plugins, etc.) are included in the Leap Motion SDK, which can be downloaded from the portal. The supported languages are JavaScript, C#, C++, Java, Python and Objective-C. General classes, like Controller, Frame, Hand, Finger, Pointable and Vector, are present in the APIs of all these languages. There is also a pack of assets dedicated to Unity and an independent plugin for Unreal.
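Applications built on the SDK typically receive tracking data in one of two ways: by polling the Controller for its most recent Frame, or by registering a listener object that the Controller notifies for each new frame. The SDKs of that period exposed, for example, a Listener class with an on_frame callback. Because the SDK cannot be assumed to be installed, the sketch below reproduces only this pattern, using hypothetical mock classes (MockController, HandCounter, and a dictionary in place of a real Frame). It is not the SDK's API.

```python
from typing import Any, Dict, List, Optional

FrameData = Dict[str, Any]  # hypothetical stand-in for a real Frame object


class Listener:
    """Base class for frame callbacks; subclasses override on_frame."""

    def on_frame(self, controller: "MockController") -> None:
        pass


class MockController:
    """Hypothetical stand-in for the SDK Controller (not the real class).

    It stores the latest frame for polling and notifies every registered
    listener whenever a new frame arrives.
    """

    def __init__(self) -> None:
        self._listeners: List[Listener] = []
        self._latest: Optional[FrameData] = None

    def add_listener(self, listener: Listener) -> None:
        self._listeners.append(listener)

    def frame(self) -> Optional[FrameData]:
        """Polling style: return the most recent frame, or None before the first one."""
        return self._latest

    def push(self, frame: FrameData) -> None:
        """Simulate the tracking service delivering a new frame (callback style)."""
        self._latest = frame
        for listener in self._listeners:
            listener.on_frame(self)


class HandCounter(Listener):
    """Example listener that records how many hands each frame contains."""

    def __init__(self) -> None:
        self.counts: List[int] = []

    def on_frame(self, controller: MockController) -> None:
        frame = controller.frame()
        if frame is not None:
            self.counts.append(len(frame["hands"]))


controller = MockController()
counter = HandCounter()
controller.add_listener(counter)
controller.push({"id": 1, "hands": []})
controller.push({"id": 2, "hands": ["left", "right"]})
print(counter.counts)            # [0, 2]
print(controller.frame()["id"])  # 2
```

Polling suits applications with their own render loop, such as a game engine that asks for the latest frame once per rendered image. Callbacks suit event-driven code that should react to every tracking update.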

Users contribute libraries for integration with other programming languages, like Processing, or code that improves the performance of existing features. For example, Nowicki, Pilarczyk, Wasikowski and Zjawin (2014) use Support Vector Machines and Hidden Markov Models to create a gesture recognition library for real-time processing.
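Gesture recognition libraries of this kind turn tracking data into numeric feature vectors and train a classifier on labeled examples. Nowicki et al. use Support Vector Machines and Hidden Markov Models. To illustrate only the feature-vector pipeline, the sketch below swaps in a much simpler technique, a nearest-centroid classifier. The feature choice (fraction of extended fingers and a grab value between 0 and 1) and the training examples are hypothetical.

```python
import math
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple

Features = Tuple[float, ...]


class NearestCentroid:
    """Nearest-centroid classifier: each gesture is summarized by the mean of its examples."""

    def __init__(self) -> None:
        self.centroids: Dict[str, Features] = {}

    def fit(self, samples: Sequence[Features], labels: Sequence[str]) -> "NearestCentroid":
        groups: Dict[str, List[Features]] = defaultdict(list)
        for features, label in zip(samples, labels):
            groups[label].append(features)
        for label, examples in groups.items():
            n = len(examples)
            # average each feature dimension over the examples of this gesture
            self.centroids[label] = tuple(sum(column) / n for column in zip(*examples))
        return self

    def predict(self, features: Features) -> str:
        # pick the gesture whose centroid is closest in Euclidean distance
        return min(self.centroids, key=lambda label: math.dist(features, self.centroids[label]))


# Hypothetical per-frame features: (extended fingers / 5, grab value in [0, 1]).
train_x = [(1.0, 0.0), (0.8, 0.1), (0.0, 1.0), (0.0, 0.9), (0.2, 0.6)]
train_y = ["open", "open", "fist", "fist", "point"]
clf = NearestCentroid().fit(train_x, train_y)
print(clf.predict((0.9, 0.05)), clf.predict((0.1, 0.95)))
```

A static classifier like this one looks at a single frame at a time. Dynamic gestures, such as a swipe or a circle, require modeling sequences of frames, which is the kind of problem Hidden Markov Models are typically used for.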

Leap Motion in Learning

Description

Leap Motion devices allow users to work touch-free with digital systems and to interact with 2D and 3D environments in a familiar way. Students welcome them not just because of the novelty of the idea, but also because they are easy to use and allow natural interaction with digital entities. Students can work instinctively: they can dissect bodies in anatomy classes, see molecules in 3D form, and play music or create sculptures without physical instruments.

Applications

Many applications use the LMC for learning purposes, and their number is continuously increasing. Students from the Faculty of Engineering in Foreign Languages of the University POLITEHNICA of Bucharest work with the device in various contexts, preparing themselves for the next generation of HCI devices. They use it in interdisciplinary project-based learning (Pavaloiu, Petrescu & Dragomirescu, 2014) or in VR-based learning applications. In the following, we demonstrate the usefulness of this concept by presenting some of their applications.

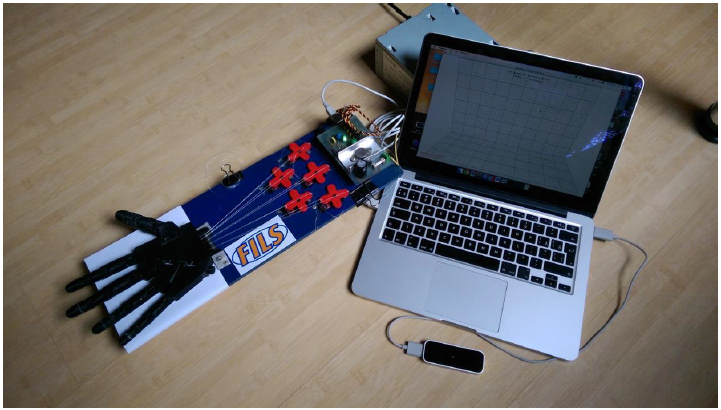

In Fig.

of an interdisciplinary robotics diploma project. The LMC reads the position of the fingers and transfers it to the computer. The corresponding angles are encoded and sent to an Arduino board that controls the positions of the fingers using 5 servos. The code is developed in JavaScript.
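The pipeline described above, with finger data in and servo angles out, can be sketched as follows. The original project was written in JavaScript and its encoding is not described here, so every detail below is a hypothetical choice for illustration: the normalized flexion input, the 0 to 180 degree servo range and the 6-byte packet layout with a 255 start marker.

```python
from typing import Sequence

FINGERS = ("thumb", "index", "middle", "ring", "pinky")
START_BYTE = 0xFF  # hypothetical marker; angles never exceed 180, so 255 is unambiguous


def flexion_to_angle(flexion: float, min_angle: int = 0, max_angle: int = 180) -> int:
    """Map a normalized flexion (0 = straight, 1 = fully bent) to a servo angle in degrees."""
    flexion = min(max(flexion, 0.0), 1.0)  # clamp noisy tracking values into range
    return round(min_angle + flexion * (max_angle - min_angle))


def encode_angles(flexions: Sequence[float]) -> bytes:
    """Build a 6-byte packet: the start marker followed by one angle byte per finger."""
    if len(flexions) != len(FINGERS):
        raise ValueError(f"expected {len(FINGERS)} values, got {len(flexions)}")
    return bytes([START_BYTE] + [flexion_to_angle(f) for f in flexions])


packet = encode_angles([0.0, 0.5, 1.0, 1.2, -0.1])
print(list(packet))  # [255, 0, 90, 180, 180, 0]
```

On the Arduino side, a matching receiver would wait for the 255 marker, read the next five bytes and write each one to its servo. From Python, the packet could be sent with the pyserial library, for example `serial.Serial(port, 9600).write(packet)`, where the port name and baud rate are again assumptions.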

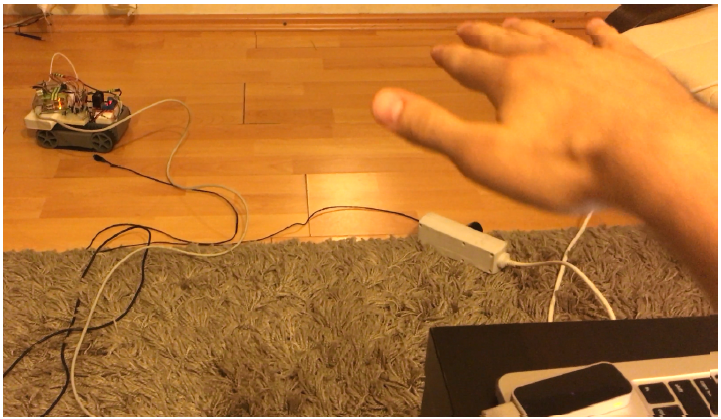

The PiRover robot, a rover-type machine controlled using gestures, is shown in Fig. The hand movements are read by the device and interpreted, and the corresponding commands are sent to the robot over a wired or wireless connection (Bluetooth or Wi-Fi). The robot's control unit, a Raspberry Pi 2 board, receives the commands and drives the rover tracks accordingly. The position of the palm above the device sets the direction: forward, backward, left, right or combinations of these. A closed fist is the signal to stop. The code is developed in Python.
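The mapping from palm position to rover commands can be sketched as a small pure function. The actual PiRover code is not reproduced here. The dead-zone size, the fist threshold and the command strings are hypothetical. The coordinate convention is assumed to follow the Leap one, with +x pointing to the user's right and +z pointing toward the user.

```python
DEAD_ZONE_MM = 40.0   # hypothetical: palm offsets smaller than this are ignored
FIST_THRESHOLD = 0.8  # hypothetical: grab value (0 = open hand, 1 = fist) treated as a fist


def rover_command(palm_x: float, palm_z: float, grab: float) -> str:
    """Turn the palm offset above the sensor (mm) and a grab value into a drive command.

    Assumed convention (Leap-style): +x points to the user's right and +z
    toward the user, so pushing the palm away from the user (negative z)
    means 'forward'.
    """
    if grab >= FIST_THRESHOLD:
        return "stop"  # a closed fist always stops the rover
    parts = []
    if palm_z < -DEAD_ZONE_MM:
        parts.append("forward")
    elif palm_z > DEAD_ZONE_MM:
        parts.append("backward")
    if palm_x < -DEAD_ZONE_MM:
        parts.append("left")
    elif palm_x > DEAD_ZONE_MM:
        parts.append("right")
    return "-".join(parts) if parts else "neutral"  # combined directions, e.g. "forward-left"


for sample in [(0.0, -100.0, 0.1), (80.0, -100.0, 0.0), (10.0, 10.0, 0.0), (0.0, -100.0, 0.9)]:
    print(sample, rover_command(*sample))
```

The Raspberry Pi would then receive these strings over Bluetooth or Wi-Fi and map each one to track motor speeds. The dead zone keeps the rover still while the hand hovers near the center, instead of reacting to tracking jitter.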

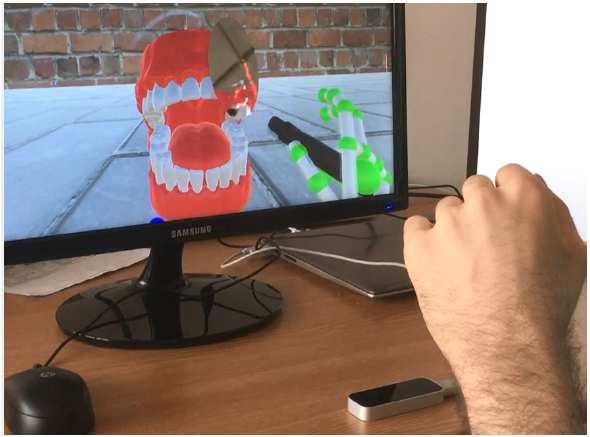

Some of our efforts are dedicated to creating VR applications for e-learning, and our preferred development environment is Unity. The Leap Motion Development Portal integrates the LMC well into Unity through a plugin and several assets (2015). Pavaloiu, Sandu, Grigorescu, Ioaniţescu and Dragoi (2016) describe the use of VR for dentistry learning, while Pavaloiu, Sandu, Grigorescu and Dragoi (2016) show the advantages of inexpensive devices like the LMC for VR training. A major drawback of non-haptic devices is that they are not effective for learning medical procedures that involve force feedback, like drilling or cutting. There are still several operations that can be practiced and improved in VR, like visualization, choosing the right treatment, preparing the resins, etc. In Fig.

application that challenges the student to discover caries using a mouth mirror. The code is developed in Unity.

Future of Leap-Motion Type Devices

Natural user interfaces will dominate HCI in the coming years. The simplicity and low cost of the Leap Motion make it a prominent candidate for future mobile interfaces. It is relatively simple to integrate the LMC hardware into a smartphone and obtain a companion natural interface to the surrounding digital devices. Given the large computing power of today's smartphones, they will perform all the data processing and will also adapt the recognition to the owner's characteristics.

Conclusions

The device is a great tool for experimentation and learning, allowing students to have natural 3D interactions in domains like sculpture, painting, design, music, medicine, natural sciences and engineering. With Leap Motion, students can have immersive experiences in Virtual Reality environments or intuitively control digital devices in the real world using a simple and affordable interface. Learning how to use it is natural, and learning how to program this type of device is a good exercise for a future career.

Acknowledgements

The robotic hand controller was developed by the students Viviana Dinu and Tudor Codarnai as part of their diploma project, the touchless control of the PiRover designed by Nicholas Uzoma Muoh was developed by Imad Abdul-Karim, and the VR code for dentistry training comes from Adrian Petroşanu. The ideas related to VR dentistry training originate from, and are supported by, the project PN-II-PT-PCCA-2013-4-0644 “VIR-PRO - Virtual e-learning platform based on 3D applications for dental prosthetics”, developed through the Romanian Joint Applied Research Projects PN II Program.

References

- Bachmann, D., Weichert, F., & Rinkenauer, G. (2015). Evaluation of the Leap Motion Controller as a New Contact-Free Pointing Device. Sensors 2015, 15, 214-233.

- Baya, V., & Null, C. (2016). How will people interact with augmented reality? Technology Forecast: Augmented reality, Issue 3, 2016.

- Dix, A., Finlay, J., Abowd, G. D., & Beale, R. (2004). Human–Computer Interaction (Third Edition). Pearson Education Limited.

- Harper, R., Rodden, T., Rogers, Y., & Sellen, A. (Eds.) (2008). Being Human: Human-Computer Interaction in the year 2020. Microsoft Research Ltd.

- Hwang, H. J., Kim, S., Choi, S., & Im, C. H. (2013). EEG-Based Brain-Computer Interfaces: A Thorough Literature Survey. International Journal of Human-Computer Interaction, 29:12, 814-826.

- Leap Motion. (2012). Leap Motion Unveils World's Most Accurate 3-D Motion Control Technology for Computing. Retrieved from https://www.leapmotion.com/news/leap-motion-unveils-world-smost-accurate-3-d-motion-control-technology-for-computing

- Nowicki, M., Pilarczyk, O., Wasikowski, J., & Zjawin, K. (2014). Gesture Recognition Library for Leap Motion Controller (Bachelor’s thesis). Poznan University of Technology. Retrieved from http://www.cs.put.poznan.pl/wjaskowski/pub/theses/LeapGesture_BScThesis.pdf

- Pavaloiu, I. B., Petrescu, I., & Dragomirescu, C. (2014). Interdisciplinary Project-Based Laboratory Works. The 6th International Conference Edu World 2014 “Education Facing Contemporary World Issues”, 7th - 9th November 2014, Procedia - Social and Behavioral Sciences, Volume 180, pp. 1145–1151, doi: 10.1016/j.sbspro.2015.02.230.

- Pavaloiu, I. B., Sandu, S. A., Grigorescu, S., Ioaniţescu, R., & Dragoi, G. (2016). Virtual Reality for Education and Training in Dentistry. Proceedings of ELSE 2016, The 10th International Scientific Conference eLearning and Software for Education, Bucharest, April 21-22, 2016, doi: 10.12753/2066-026X-16-052.

- Pavaloiu, I. B., Sandu, S. A., Grigorescu, S., & Dragoi, G. (2016). Inexpensive Dentistry Training using Virtual Reality Tools. INTED2016, The 10th annual International Technology, Education and Development Conference, 279-285, doi: 10.21125/inted.2016.1071.

- Pavaloiu, I. B., Dinu, V., & Codârnai, T. (2016). Inexpensive Design and Control for a Robotic Hand. ICERI2016, the 9th annual International Conference of Education, Research and Innovation, 1742-1750, doi: 10.21125/iceri.2016.1391.

- Tate, K. (2013). How the Human/Computer Interface Works (Infographics). Retrieved from http://www.livescience.com/37944-how-the-human-computer-interface-works-infographics.html

- Weichert, F., Bachmann, D., Rudak, B., & Fisseler, D. (2013). Analysis of the Accuracy and Robustness of the Leap Motion Controller. Sensors 2013, 13, 6380-6393.

Copyright information

This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License.

About this article

Publication Date

25 May 2017

eBook ISBN

978-1-80296-022-8

Publisher

Future Academy

Volume

23

Edition Number

1st Edition

Pages

1-2032

Subjects

Educational strategies, educational policy, organization of education, management of education, teacher, teacher training

Cite this article as:

Păvăloiu, I. (2017). Leap Motion Technology In Learning. In E. Soare, & C. Langa (Eds.), Education Facing Contemporary World Issues, vol 23. European Proceedings of Social and Behavioural Sciences (pp. 1025-1031). Future Academy. https://doi.org/10.15405/epsbs.2017.05.02.126