Abstract

The rapid diffusion of information technology resulting from the Covid-19 pandemic has affected contemporary businesses and, accordingly, has imposed challenges on the auditing profession. The form of business evidence has changed from physical documents to databases. Hence, auditors no longer need to rely on samples to evaluate controls; instead, they can analyze their data using software. Several authors have proposed measurement scales for IT competencies but have not focused specifically on internal auditors’ IT competency. Hence, this paper develops a measurement scale of internal auditors’ IT competency in conducting the audit process. This measurement scale is crucial because IT competencies play an important role in determining the effectiveness of auditors. Five internal auditing practitioners were interviewed in the items development phase to define the relevant IT competencies for internal auditors, and 106 respondents were involved in the scale development phase. The scale was validated by administering a survey to 202 respondents. The results confirm that the proposed scale is unidimensional and has adequate validity and reliability to measure internal auditors’ IT competency. By assessing IT competency accurately, the scale can be used for internal auditor staffing purposes. Thus, it could enhance the efficiency and effectiveness of internal auditors in exercising their duties as the third line of defense.

Keywords: Competency Measurement, Covid-19 Pandemic, Internal Auditor, Information Technology

Introduction

Recently, the use of Information Technology (IT) in the business world has become a necessity. It is hard to find a business that does not use IT at all. Consequently, internal auditors, as the third line of defense, should adapt and prepare themselves for such an IT environment. The use of technology and the knowledge of how information technology works have become essential at all levels of internal audit positions (Bailey, 2010; Henderson et al., 2013; Thottoli, 2021) and an essential part of the Internal Auditor’s Common Body of Knowledge (CBOK) (Bailey, 2010; Cangemi, 2015; IIA, 2020; Moeller, 2016).

The significance of internal auditors’ IT competency in their daily activities can be summarized in four reasons. First, it is required by the standards. The International Standards for the Professional Practice of Internal Auditing (ISPPIA) para 1220.A2 requires internal auditors to consider the use of technology in data analysis. Para 1210.A3 states that internal auditors are required to have sufficient competencies in technology-based audit techniques and knowledge about IT risk and control. Para 2110.A2 stipulates that internal auditors must assess whether the implemented IT governance supports the organization’s objectives.

Second, IT systems enable all business activities to be conducted without paper. The maker, checker, and approver steps of each transaction are carried out in the system. This changes the form of evidence from physical paper documents to digital database evidence (Oldhouser, 2016; Rakipi et al., 2021; Vona, 2017). Consequently, internal auditors must adapt: they can no longer rely on conventional audits, and the use of IT to support the audit process is a must (Héroux & Fortin, 2013; Madani, 2009; Rakipi et al., 2021; Wicaksono et al., 2018).

Third, the change in the form of audit evidence has led to more sophisticated fraud. Fraudsters hide fraud scenarios in the company’s databases, which makes them challenging to detect manually without sophisticated tools (Vona, 2017). In such cases, data mining and analytics audit software play a significant role in helping an IT-competent auditor uncover the fraud scenarios (Abiola, 2014; Barman et al., 2016; Grandstaff & Solsma, 2019; Mohammadi et al., 2020; Ngai et al., 2011; Yao et al., 2018; Yue et al., 2007).

Fourth, there is a need for a fast and effective audit process and timely reports. IT competencies help auditors operate tools such as electronic work papers; an automated tool to monitor and track audit remediation and follow-up; an automated tool to manage the information collected by internal audit; an automated tool for data analytics; software or a tool for data mining; flowchart or process mapping software; software or a tool for internal audit risk assessment; an automated tool for internal audit planning and scheduling; continuous/real-time auditing; and an automated tool for internal quality assessments (Cangemi, 2015). These tools can reduce the workload and the time pressure on the auditor, which in turn can reduce dysfunctional auditor behavior (Zakaria et al., 2013).

Unfortunately, internal auditors’ digital information technology fitness is still at the beginner stage (PricewaterhouseCoopers, 2019). Their IT competency has not yet enabled internal auditors to carry out their duties effectively and efficiently. Moreover, IT competency for internal auditors is not well defined, and there is still a lack of agreement on the IT competencies that internal auditors need to possess. Meanwhile, many recent studies examine the impact of internal auditors’ IT competency on various dependent variables, and these studies need a measurement scale for self-assessing perceived IT competency. With respect to internal auditors, Cangemi (2015) conducted a survey that listed the internal-auditing-related software that internal auditors need to master to carry out their jobs. However, the survey was not intended to serve as a measurement scale. Thus, this study aims to develop a valid and reliable self-assessment measurement scale of internal auditors’ IT competency for use in future research.

Literature Review

Previous studies have provided various definitions of IT competency for various subjects. Ku Bahador and Haider (2012) define IT competency for an accountant as the set of IT skills and other supporting soft skills needed to maintain an accounting information system. Bassellier et al. (2001) define IT competency for a business manager as the set of IT knowledge someone must possess to exhibit IT leadership in the area of business. Other researchers define IT competency for IT managers as a combination of three critical components: IT knowledge, IT operation, and IT objects (Tippins & Sohi, 2003), or as a competency to support effective IT management (Croteau & Raymond, 2004).

In general, IT competency refers to the employee’s perceived degree of familiarity with using an operating system, office software, and hardware (Peng et al., 2015), or to the knowledge, skills, and attributes that allow a person to reach IT effectiveness in fulfilling daily duties (Ni & Chen, 2016). IT competency is also defined as the knowledge, skills, attitude, and abilities that a person must possess to be successful in handling their work and developing their professional career (Devece Carañana et al., 2016). Moreover, Carnaghan (2004) defines it as what would be demonstrated by activities such as using software for particular purposes to support daily activities.

For internal auditors, IT competency refers to what they would demonstrate, such as the ability to use various software and technologies to perform their daily audit activities effectively (Carnaghan, 2004). This definition assumes that internal auditors' IT knowledge and IT skills are reflected in their ability to operate the software and tools and to act on their interpretation of the results. It is in line with the competency definition of Hannon et al. (2000), which is the ability to utilize skill and knowledge in work activities that can be assessed through performance. In this study, IT competency is self-assessed by the internal auditors using a questionnaire survey about their perceived competencies (skills, knowledge, and behavior) in using software and technology to perform their audit assignments. Questionnaires have become common practice in research and are considered the most accurate method for measuring a latent variable, especially when the research involves many respondents (Sekaran & Bougie, 2016). They also make it convenient for target respondents to provide the required data in sufficient time and without pressure, so that the collected responses are more realistic, reliable, and honest (Gosselin, 1997).

Currently, various instruments for measuring IT competency have been proposed by many researchers. Bahador et al. (2012) measured IT competency with four dimensions: technical skills, organization skills, conceptual skills, and people skills; however, the construct is intended to measure general accountants’ IT competency in maintaining their accounting information systems. For external auditors, IAESB (2007) proposed IT competency standards with four dimensions: competency in assessing the overall IT control environment, competency in assessing the planning of the financial accounting system, competency in evaluating the financial accounting system, and competency in using IT to communicate the audit result. Tsai et al. (2017) proposed a self-reported Likert-scale questionnaire to assess perceived auditors’ IT competency in Taiwan. They applied three dimensions: technology, conceptual, and realization competency. The first dimension covered data protection, data security, data recovery, and data access, while the second dimension covered the internet and its security, new software/application review, viruses, and computer defense. The last dimension covered the capability to use working software, auditing software, and database management software. However, the items covered in the three dimensions were mainly detailed parts of IT auditors’ expertise. This study aims to assess the perceived IT competency of internal auditors in supporting their audit assignments and is not intended to measure the IT competency of IT auditors.

Methodology

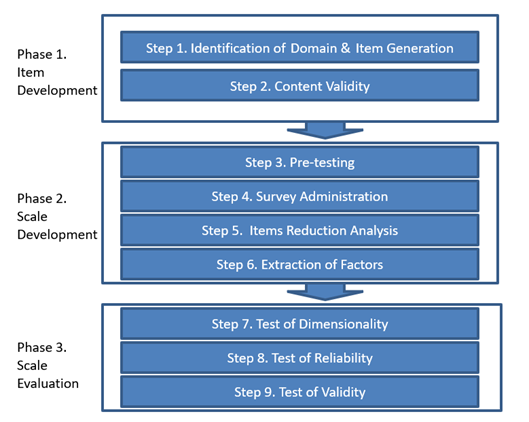

In developing the scale, this study follows the iterative steps suggested by Boateng et al. (2018) and shown in Figure 1: the items development phase, the scale development phase, and the scale evaluation phase.

The Items Development Phase

Items of the construct were taken from the survey of Cangemi (2015). To confirm them and to gain insight into the most critical software in internal auditing practice, two rounds of discussion were conducted with five expert internal auditing practitioners. To be eligible, the experts were required to have more than 15 years of experience as internal auditors. The discussions yielded several insights, such as the significant differences between IT competency for internal auditors and IT competency for IT auditors. IT auditors need competency that supports them in reviewing the reliability, security, and accuracy of the IT implemented by entities, whereas IT competency for internal auditors is the mastery of tools and software that help them perform well in carrying out audit assignments. The panel also recommended eliminating redundant items; for example, ITC04 and ITC09 were considered redundant. ISACA defines CAATs (Computer Aided Audit Tools) as any use of technology to support an audit, which includes multipurpose audit software that can be used to select, match, recalculate, and report data. Thus, data mining and data analytics software are also included in CAATs. The result of the items development phase is highlighted in Table 1.

In the second-round discussion, the experts were asked to assess the validity of the item statements on a four-point Likert scale: Very Irrelevant (1), Irrelevant (2), Relevant (3), and Very Relevant (4). The results of the experts’ assessment are reported in Table 1 as item means. A mean below the scale midpoint (2.50) can be interpreted as irrelevant or very irrelevant, and any such item should be removed from the item list. Since no item had a mean below 2.50, no items were judged irrelevant for measuring the IT competency of internal auditors.
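The screening rule described above can be sketched in a few lines of Python. The item names and ratings below are hypothetical placeholders rather than the panel's actual responses; only the four-point scale and the 2.50 midpoint come from the text.

```python
from statistics import mean

SCALE_MIDPOINT = 2.5  # midpoint of the 1-4 relevance scale described in the text


def screen_items(ratings):
    """Return (item means, list of items whose mean falls below the midpoint)."""
    means = {item: mean(scores) for item, scores in ratings.items()}
    flagged = [item for item, m in means.items() if m < SCALE_MIDPOINT]
    return means, flagged


# Hypothetical ratings from five experts (1 = Very Irrelevant ... 4 = Very Relevant).
hypothetical_ratings = {
    "ITEM_A": [4, 3, 4, 4, 3],
    "ITEM_B": [3, 3, 4, 3, 4],
    "ITEM_C": [2, 2, 3, 2, 2],  # deliberately low, to show the rule firing
}

item_means, flagged_items = screen_items(hypothetical_ratings)
print(item_means)
print(flagged_items)
```

In the study itself no item fell below the midpoint, so the flagged list would be empty for the real panel data.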

The internal audit experts agreed that the items can assess IT competency. Likewise, they agreed that the measurement was practical and applicable to internal auditors. The validity of the construct is reflected by the Fleiss kappa inter-rater value (Boateng et al., 2018). Fleiss kappa measures the degree of agreement among more than two raters (Fleiss, 1971). Based on the online Kappa calculator (http://justusrandolph.net/kappa/), the kappa for four categories (very irrelevant, irrelevant, relevant, and very relevant), nine item statements (after the elimination of ITC07 and ITC09), and five expert raters was 0.53, which is categorized as a moderate strength of agreement (Landis & Koch, 1977).
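For readers who want to reproduce this kind of agreement statistic, the following is a minimal sketch of Fleiss' fixed-marginal kappa (Fleiss, 1971) together with the Landis and Koch (1977) labels. The rating counts are hypothetical and are not the panel's data, so the printed value is not expected to equal the reported 0.53; the function names are illustrative.

```python
def fleiss_kappa(counts):
    """Fleiss' kappa; counts[i][j] = number of raters placing item i in category j."""
    n_items = len(counts)
    n_raters = sum(counts[0])
    if any(sum(row) != n_raters for row in counts):
        raise ValueError("every item must be rated by the same number of raters")
    # Observed agreement for each item, then averaged over items.
    p_items = [
        (sum(c * c for c in row) - n_raters) / (n_raters * (n_raters - 1))
        for row in counts
    ]
    p_bar = sum(p_items) / n_items
    # Chance agreement from the overall proportion of ratings per category.
    n_categories = len(counts[0])
    p_cat = [
        sum(row[j] for row in counts) / (n_items * n_raters)
        for j in range(n_categories)
    ]
    p_e = sum(p * p for p in p_cat)
    # Undefined (division by zero) if every rating falls in a single category.
    return (p_bar - p_e) / (1 - p_e)


def landis_koch_label(kappa):
    """Strength-of-agreement label following Landis and Koch (1977)."""
    if kappa < 0:
        return "poor"
    for upper, label in [(0.20, "slight"), (0.40, "fair"), (0.60, "moderate"),
                         (0.80, "substantial")]:
        if kappa <= upper:
            return label
    return "almost perfect"


# Hypothetical counts: 9 items, 5 raters, 4 categories
# (very irrelevant, irrelevant, relevant, very relevant).
hypothetical_counts = [
    [0, 0, 1, 4], [0, 0, 4, 1], [0, 1, 4, 0], [0, 0, 0, 5], [0, 0, 5, 0],
    [0, 0, 2, 3], [0, 4, 1, 0], [0, 0, 1, 4], [0, 0, 4, 1],
]
kappa = fleiss_kappa(hypothetical_counts)
print(round(kappa, 3), landis_koch_label(kappa))
```

Applying `landis_koch_label` to the reported value of 0.53 returns "moderate", which matches the interpretation given in the text.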

The Scale Development

The next step was a pre-test. Five academicians and ten internal auditing practitioners pre-tested the scale and gave feedback to improve it, especially in terms of ease of understanding and other technical matters. They suggested that the scale would be easier to understand if identical question wording were combined rather than repeated in every item. The following stage was pilot testing, in which responses from 106 respondents were used for analysis. The demographic profile of the respondents is shown in Table 2.

Exploratory Factor Analysis (EFA) was conducted to reduce items and extract dimensions. First, Bartlett’s test of sphericity and the Kaiser-Meyer-Olkin Measure of Sampling Adequacy (KMO-MSA) test were conducted to assess whether the items were sufficiently inter-correlated for further processing with EFA. Bartlett’s test should be significant at the 0.05 level at least, and the KMO-MSA should be higher than 0.500 (Hair et al., 2019). Both tests showed acceptable results (as highlighted in Table 3): Bartlett’s test was significant (p < 0.001), and the KMO-MSA was 0.893, which is higher than 0.500. Moreover, the Measure of Sampling Adequacy for each item (as shown in Table 4) ranged from 0.883 to 0.923, which is also in the acceptable range suggested by Hair et al. (2019). Thus, the tests indicated that the data were appropriate for further analysis with EFA.
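The two adequacy checks above can be illustrated with a dependency-free sketch that computes Bartlett's chi-square statistic, the overall KMO, and the per-item MSA from a correlation matrix. The 3 x 3 correlation matrix is hypothetical; only the sample size of 106 is taken from the pilot test, and the helper names are illustrative. The p-value would come from a chi-square distribution with the returned degrees of freedom, which is left out here to avoid extra dependencies.

```python
import math


def invert_and_det(matrix):
    """Gauss-Jordan elimination with partial pivoting: returns (inverse, determinant)."""
    n = len(matrix)
    aug = [list(map(float, row)) + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(matrix)]
    det = 1.0
    for col in range(n):
        pivot_row = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot_row][col]) < 1e-12:
            raise ValueError("matrix is singular")
        if pivot_row != col:
            aug[col], aug[pivot_row] = aug[pivot_row], aug[col]
            det = -det  # a row swap flips the sign of the determinant
        pivot = aug[col][col]
        det *= pivot
        aug[col] = [v / pivot for v in aug[col]]
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [v - factor * p for v, p in zip(aug[r], aug[col])]
    return [row[n:] for row in aug], det


def bartlett_statistic(corr, n_obs):
    """Bartlett's test of sphericity: returns (chi-square statistic, degrees of freedom)."""
    p = len(corr)
    _, det = invert_and_det(corr)
    chi2 = -(n_obs - 1 - (2 * p + 5) / 6) * math.log(det)
    return chi2, p * (p - 1) // 2


def kmo(corr):
    """Returns (overall KMO, list of per-item MSA values)."""
    p = len(corr)
    inv, _ = invert_and_det(corr)
    per_item, total_r2, total_q2 = [], 0.0, 0.0
    for i in range(p):
        r2 = q2 = 0.0
        for j in range(p):
            if i != j:
                # Partial correlation between items i and j given all other items.
                partial = -inv[i][j] / math.sqrt(inv[i][i] * inv[j][j])
                r2 += corr[i][j] ** 2
                q2 += partial ** 2
        per_item.append(r2 / (r2 + q2))
        total_r2 += r2
        total_q2 += q2
    return total_r2 / (total_r2 + total_q2), per_item


# Hypothetical correlation matrix for three items; n_obs = 106 as in the pilot test.
hypothetical_corr = [[1.0, 0.6, 0.5], [0.6, 1.0, 0.7], [0.5, 0.7, 1.0]]
print(bartlett_statistic(hypothetical_corr, 106))
print(kmo(hypothetical_corr))
```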

Based on the extraction sums of squared loadings, the scale was unidimensional, with 70.326% of total variance explained, as shown in Table 5. Hair et al. (2019) suggested that the total variance explained should be higher than 60%. Thus, the 70.326% of variance explained by the scale is acceptable.

At the item level, validity was assessed using the communalities and the factor loading matrix. Hair et al. (2019) suggested that communalities should be higher than 0.50 and that any item with a factor loading below 0.55 should be eliminated. The communalities ranged between 0.590 and 0.773 (as highlighted in Table 6), and the factor loadings ranged between 0.768 and 0.879 (as highlighted in Table 7); both were at an acceptable level. Thus, the items on the scale were valid.
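To make the link between loadings, communalities, and variance explained concrete, the sketch below extracts a single component from a correlation matrix by power iteration. It assumes principal component extraction, which the text does not state; under that assumption each communality equals the squared loading, and the reported ranges are consistent with this (0.768² ≈ 0.590 and 0.879² ≈ 0.773). The correlation matrix and function names are hypothetical.

```python
import math


def first_component(corr, iterations=1000, tol=1e-12):
    """Loadings, communalities, and variance share of the first principal component."""
    p = len(corr)
    vec = [1.0 / math.sqrt(p)] * p  # positive start suits positively correlated items
    eigenvalue = 0.0
    for _ in range(iterations):
        nxt = [sum(corr[i][j] * vec[j] for j in range(p)) for i in range(p)]
        norm = math.sqrt(sum(v * v for v in nxt))
        nxt = [v / norm for v in nxt]
        # Rayleigh quotient of the normalized vector estimates the eigenvalue.
        new_eigenvalue = sum(
            nxt[i] * sum(corr[i][j] * nxt[j] for j in range(p)) for i in range(p)
        )
        converged = abs(new_eigenvalue - eigenvalue) < tol
        vec, eigenvalue = nxt, new_eigenvalue
        if converged:
            break
    loadings = [math.sqrt(eigenvalue) * v for v in vec]
    communalities = [l * l for l in loadings]
    return loadings, communalities, eigenvalue / p


def items_to_drop(loadings, communalities, loading_min=0.55, communality_min=0.50):
    """Indices of items falling below the cut-offs cited from Hair et al. (2019)."""
    return [i for i, (l, h) in enumerate(zip(loadings, communalities))
            if abs(l) < loading_min or h < communality_min]


# Hypothetical correlation matrix for four items.
hypothetical_corr = [
    [1.00, 0.60, 0.50, 0.55],
    [0.60, 1.00, 0.65, 0.60],
    [0.50, 0.65, 1.00, 0.70],
    [0.55, 0.60, 0.70, 1.00],
]
loadings, communalities, explained = first_component(hypothetical_corr)
print([round(l, 3) for l in loadings])
print(round(explained, 3), items_to_drop(loadings, communalities))
```

With a single extracted component, the share of variance explained is simply the mean of the communalities, which is why the 60% guideline and the 0.50 communality guideline tend to move together.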

The Scale Evaluation

The scale evaluation aims to test the reliability and validity of the scale at a different time and with a different dataset. A new survey was administered, and 208 responses were collected, of which 202 were valid for further analysis using confirmatory factor analysis (CFA). The respondents’ demographic profile is shown in Table 8.

Table 9 presents the descriptive statistics for each item of the IT competency scale. The scale contained nine items rated on a 6-point Likert scale (1=Strongly Disagree, 6=Very competent). The mean scores ranged from 3.777 to 4.629, and the standard deviations ranged between 0.936 and 1.213. All items showed a left-skewed distribution. The kurtosis results indicate that the distributions tend to be mesokurtic, as the values are within the range of -1 to +1 (except for ITC01 and ITC04).

Among the nine items, ITC04 (‘automation of organizing the information collected during audit process’) produced the highest mean score (mean=4.629, std. dev.=0.936), indicating that internal auditors perceive themselves as highly competent in automating the organization of information collected during the audit process. Meanwhile, ITC08 (‘executing audit assignment using continuous/real-time auditing software’) had the lowest mean score (3.777), indicating that internal auditors perceive relatively lower competence in using this software than in the other items. This is understandable, since continuous/real-time auditing is a relatively new approach to internal auditing. Overall, the mean score for the IT competency construct is 4.303.

Reliability is the extent to which results are consistent when the scale is administered repeatedly under identical circumstances at different times. Reliability can be assessed using Cronbach’s alpha (CA) and Composite Reliability (CR). Hair et al. (2019) suggested acceptable ranges of 0.700 – 0.950 for CA and 0.708 – 0.950 for CR. As highlighted in Tables 10 and 11, the CA was 0.929 and the CR was 0.941, both of which are acceptable. The bootstrapping tests of CA and CR were also significant at alpha 0.05. Therefore, the scale has no reliability issues.
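As a hedged illustration of the two reliability coefficients, the sketch below computes Cronbach's alpha from raw item scores and composite reliability from standardized loadings (assuming uncorrelated measurement errors). The responses and loadings are hypothetical and do not reproduce the reported 0.929 and 0.941; the function names are illustrative.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha; responses[r][i] = score of respondent r on item i."""
    k = len(responses[0])
    item_vars = [variance([row[i] for row in responses]) for i in range(k)]
    total_var = variance([sum(row) for row in responses])
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


def composite_reliability(loadings):
    """CR = (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances)."""
    squared_sum = sum(loadings) ** 2
    error_sum = sum(1 - l * l for l in loadings)  # error variance of each item
    return squared_sum / (squared_sum + error_sum)


def within(value, low, high):
    """True when a coefficient lies inside an acceptable range."""
    return low <= value <= high


# Hypothetical 6-point responses from five respondents on three items.
hypothetical_responses = [
    [5, 4, 5],
    [3, 3, 4],
    [6, 5, 6],
    [2, 3, 2],
    [4, 4, 5],
]
hypothetical_loadings = [0.78, 0.82, 0.85]

alpha = cronbach_alpha(hypothetical_responses)
cr = composite_reliability(hypothetical_loadings)
print(round(alpha, 3), within(alpha, 0.700, 0.950))
print(round(cr, 3), within(cr, 0.708, 0.950))
```

The upper bound of 0.950 matters as much as the lower one: values above it usually signal redundant items rather than better measurement.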

The validity test assesses the degree to which a scale measures the intended construct. Validity can be assessed using the Average Variance Extracted (AVE) and the factor loading of each item. The AVE should be higher than 0.500, which means that the latent construct explains at least 50% of the variance of its items (Bagozzi & Yi, 1988). The factor loading should be higher than 0.708 (Hair et al., 2019). As depicted in Tables 12 and 13, the AVE and all factor loadings are higher than their thresholds, and the bootstrapping test results are significant at alpha 0.05. Thus, validity is not an issue for the scale.

Unidimensionality can be assessed using CFA by ensuring that the factor loadings are higher than the threshold and have the same direction (Awang, 2015). As depicted in Table 13, all factor loadings were higher than 0.708 and had the same positive direction. Thus, unidimensionality was confirmed.
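The validity and unidimensionality checks described above reduce to simple arithmetic on the standardized loadings, sketched below. The loadings are hypothetical, not those in Table 13, and the function names are illustrative. Note that 0.708² ≈ 0.501, which is why a loading cut-off of 0.708 corresponds to an item sharing roughly half of its variance with the construct.

```python
def average_variance_extracted(loadings):
    """AVE = mean of the squared standardized loadings."""
    return sum(l * l for l in loadings) / len(loadings)


def check_scale(loadings, ave_min=0.500, loading_min=0.708):
    """Summarize the AVE, weak items, and whether all loadings share one direction."""
    ave = average_variance_extracted(loadings)
    weak_items = [i for i, l in enumerate(loadings) if abs(l) <= loading_min]
    same_direction = all(l > 0 for l in loadings) or all(l < 0 for l in loadings)
    return {
        "ave": ave,
        "ave_ok": ave > ave_min,
        "weak_items": weak_items,
        "same_direction": same_direction,
    }


# Hypothetical standardized loadings for a nine-item, single-factor model.
hypothetical_loadings = [0.75, 0.80, 0.85, 0.78, 0.82, 0.79, 0.81, 0.76, 0.84]
print(check_scale(hypothetical_loadings))
```

A scale passes this sketch when `ave_ok` is true, `weak_items` is empty, and `same_direction` is true.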

Discussion

An IT competency measurement scale for internal auditors is urgently needed. IT competency measurement scales have been proposed for accountants, external auditors, business managers, IT auditors, and IT managers, but one for internal auditors is still lacking. The global common body of knowledge of the Institute of Internal Auditors has defined the software and tools that internal auditors need to master to support their daily jobs, but it has not yet been turned into a measurement scale. The scale proposed here consists of nine items in a single dimension; however, it has to be updated periodically because audit tools and software are continuously becoming more sophisticated.

IAESB (2007) proposed IT competency standards for external auditors with four dimensions: assessing the overall IT control environment, planning the audit assignment, performing the evaluation, and communicating the audit result. In this paper, knowledge and skill in IT governance, risk, and the control environment are covered by the competency in using risk and control assessment software and business process mapping software. The results from the risk assessment and business process mapping tools are used to prioritize the areas to be audited first in the audit planning and scheduling software. In performing the evaluation, or audit fieldwork, auditors are equipped with data mining, data analytics, and continuous/real-time audit software. Finally, communicating the result, following up on recommendations, and audit quality assessment reflect the fourth dimension. This is in line with the definition of competency by Hannon et al. (2000): the knowledge, skill, and proper behavior of auditors in reacting to the results of the software or tools.

Conclusion, Limitation and Recommendation for Future Studies

This study was conducted to fulfill the need for a valid self-assessment scale measuring internal auditors’ perceived IT competency. The scale’s items are taken from the Cangemi (2015) survey, which defines the tools and software that internal auditors should master to support their audit assignments. An expert panel performed the initial validation of the items. The proposed items were then purified using exploratory factor analysis (EFA) and, lastly, validated using confirmatory factor analysis (CFA). The results confirm that the proposed scale, as shown in the appendix, is unidimensional and has adequate validity and reliability to measure internal auditors’ IT competency.

In measuring IT competency, this paper assumes that internal auditors’ IT skills and IT knowledge are reflected in their ability to use the software and tools that support their audit assignments and to act on the results. The scale does not directly measure IT knowledge, IT skill, and the internal auditors' behavior in interpreting the results of audit tools and software, as defined by Hannon et al. (2000); this is a limitation of the scale. Another limitation is the use of self-assessment, which tends to inflate competency (Kruger & Dunning, 1999).

These limitations provide opportunities for future studies. Studies that measure fundamental IT competency by assessing the IT knowledge, IT skill, and behavior of an IT-competent internal auditor need to be developed. Moreover, a scale that does not rely on self-assessment should be developed to counter the tendency toward inflated perceived competency.

References

Abiola, J. (2014). The Impact of Information and Communication Technology on Internal Auditors' Independence: A PEST Analysis of Nigeria. Journal of Scientific Research and Reports, 3(13), 1738-1752.

Awang, Z. (2015). SEM Made Simple: A Gentle Approach to Learning Structural Equation Modeling (1st ed.). MPWS Rich Publication.

Bagozzi, R. P., & Yi, Y. (1988). On the evaluation of structural equation models. Journal of the Academy of Marketing Science, 16(1), 74-94.

Bahador, K. M. K., Haider, A., & Mohd Saman, W. S. W. (2012). Information Technology and Accountants - What Skills and Competencies are Required? Proceedings of the European, Mediterranean and Middle Eastern Conference on Information Systems, EMCIS 2012, 2012, 770–781.

Bailey, J. A. (2010). The IIA’s Global Internal Audit Survey: A Component of the CBOK Study Core Competencies for Today’s Internal Auditor Report II. IIA Research Foundation.

Barman, S., Pal, U., Sarfaraj, M. A., Biswas, B., Mahata, A., & Mandal, P. (2016). A complete literature review on financial fraud detection applying data mining techniques. International Journal of Trust Management in Computing and Communications, 3(4), 336.

Bassellier, G., Reich, B. H., & Benbasat, I. (2001). Information Technology Competence of Business Managers: A Definition and Research Model. Journal of Management Information Systems, 17(4), 159-182.

Boateng, G. O., Neilands, T. B., Frongillo, E. A., Melgar-Quiñonez, H. R., & Young, S. L. (2018). Best Practices for Developing and Validating Scales for Health, Social, and Behavioral Research: A Primer. Frontiers in Public Health, 6.

Cangemi, M. P. (2015). Staying a Step Ahead - Internal Audit’s Use of Technology. The Global Internal Audit Common Body of Knowledge (CBOK), (August), 16.

Carnaghan, C. (2004). Discussion of IT assurance competencies. International Journal of Accounting Information Systems, 5(2), 267–273.

Croteau, A.-M., & Raymond, L. (2004). Performance Outcomes of Strategic and IT Competencies Alignment. Journal of Information Technology, 19(3), 178-190.

Devece Carañana, C., Peris-Ortiz, M., & Rueda-Armengot, C. (2016). What are the competences in information system required by managers? Curriculum development for management and public administration degrees. Technology, Innovation and Education, 2(1).

Fleiss, J. L. (1971). Measuring nominal scale agreement among many raters. Psychological Bulletin, 76(5), 378-382.

Gosselin, M. (1997). The Effect of Strategy and Organizational Structure on the Adoption and Implementation of Activity-Based Costing. Accounting, Organizations and Society, 22(2), 105–122.

Grandstaff, J. L., & Solsma, L. L. (2019). An Analysis of Information Systems Literature: Contributions to Fraud Research. Accounting and Finance Research, 8(4), 219.

Hair, J. F. Jr., Black, W. C., Babin, B. J., & Anderson, R. E. (2019). Multivariate Data Analysis (8th ed.). Cengage.

Hannon, P. D., Patton, D., & Marlow, S. (2000). Transactional learning relationships: developing management competencies for effective small firm-stakeholder interactions. Education + Training, 42(4/5), 237-245.

Henderson, D. L., Davis, J. M., & Lapke, M. S. (2013). The Effect of Internal Auditors’ Information Technology Knowledge on Integrated Internal Audits. International Business Research, 6(4).

Héroux, S., & Fortin, A. (2013). The Internal Audit Function in Information Technology Governance: A Holistic Perspective. Journal of Information Systems, 27(1), 189–217.

IAESB. (2007). International Education Practice Statement 2 (IEPS 2): Information technology for professional accountants. International Federation of Accountants.

IIA. (2020). Internal Audit Competency Framework. Atlanta.

Kruger, J., & Dunning, D. (1999). Unskilled and Unaware of It: How Difficulties in Recognizing One’s Own Incompetence Lead to Inflated Self-Assessments. Journal of Personality and Social Psychology, 77(6), 1121–1134.

Ku Bahador, K. M., & Haider, A. (2012). Information Technology Competencies for Malaysian Accountants – An Academic’s Perspective. 23rd Australasian Conference on Information Systems, 1–12.

Landis, J. R., & Koch, G. G. (1977). The Measurement of Observer Agreement for Categorical Data. Biometrics, 33(1), 159–174.

Madani, H. H. (2009). The role of internal auditors in ERP-based organizations. Journal of Accounting & Organizational Change, 5(4), 514–526.

Moeller, R. (2016). Brink’s Modern Internal Auditing (8th ed.). John Wiley & Sons Inc.

Mohammadi, M., Yazdani, S., Hamed, K. M., & Maham, K. (2020). Financial Reporting Fraud Detection: An Analysis of Data Mining Algorithms. International Journal of Finance & Managerial Accounting, 4(16), 1–12.

Ngai, E. W. T., Hu, Y., Wong, Y. H., Chen, Y., & Sun, X. (2011). The application of data mining techniques in financial fraud detection: A classification framework and an academic review of literature. Decision Support Systems, 50(3), 559–569.

Ni, A. Y., & Chen, Y. C. (2016). A Conceptual Model of Information Technology Competence for Public Managers: Designing Relevant MPA Curricula for Effective Public Service. Journal of Public Affairs Education, 22(2), 193–212.

Oldhouser, M. C. (2016). The Effects of Emerging Technologies on Data in Auditing. University of South Carolina - Columbia. http://scholarcommons.sc.edu/senior_theses

Peng, J., Quan, J., Zhang, G., & Dubinsky, A. J. (2015). Knowledge Sharing, Social Relationships, and Contextual Performance: The Moderating Influence of Information Technology Competence. Journal of Organizational and End User Computing, 27(2), 58–73.

PricewaterhouseCoopers. (2019). Elevating internal audit’s role: The digitally fit function. PricewaterhouseCoopers. https://www.pwc.com/us/en/services/risk-assurance/library/internal-audit-transformation-study.html

Rakipi, R., De Santis, F., & D’Onza, G. (2021). Correlates of the Internal Audit Function’s Use of Data Analytics in the Big Data Era: Global Evidence. Journal of International Accounting, Auditing and Taxation, 42, 100357.

Sekaran, U., & Bougie, R. (2016). Research Methods for Business: A Skill-Building Approach (7th ed.). John Wiley & Sons Inc.

Thottoli, M. M. (2021). Impact of Information Communication Technology Competency Among Auditing Professionals. Accounting. Analysis. Auditing, 8(2), 38–47.

Tippins, M. J., & Sohi, R. S. (2003). IT competency and firm performance: Is organizational learning a missing link? Strategic Management Journal, 24(8), 745–761.

Tsai, W.-H., Chen, H.-C., Chang, J.-C., & Lee, H.-L. (2017). The Internal Audit Performance: The Effectiveness of ERM and IT Environments. In Proceedings of the 50th Hawaii International Conference on System Sciences. HICSS. http://hdl.handle.net/10125/41757

Vona, L. W. (2017). Fraud Data Analytics Methodology: The Fraud Scenario Approach to Uncovering Fraud in Core Business Systems. John Wiley & Sons Inc.

Wicaksono, A., Laurens, S., & Novianti, E. (2018). Impact Analysis of Computer Assisted Audit Techniques Utilization on Internal Auditor Performance. 2018 International Conference on Information Management and Technology (ICIMTech).

Yao, J., Zhang, J., & Wang, L. (2018). A financial statement fraud detection model based on hybrid data mining methods. 2018 International Conference on Artificial Intelligence and Big Data (ICAIBD).

Yue, D., Wu, X., Wang, Y., Li, Y., & Chu, C.-H. (2007). A Review of Data Mining-Based Financial Fraud Detection Research. 2007 International Conference on Wireless Communications, Networking and Mobile Computing.

Zakaria, N. B., Yahya, N., & Salleh, K. (2013). Dysfunctional Behavior among Auditors: The Application of Occupational Theory. Journal of Basic and Applied Science Research, 3(9), 495–503.

Copyright information

This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License.

About this article

Publication Date

15 November 2023

eBook ISBN

978-1-80296-130-0

Publisher

European Publisher

Volume

131

Edition Number

1st Edition

Pages

1-1281

Subjects

Technology advancement, humanities, management, sustainability, business

Cite this article as:

Julian, L., Johari, R. J., Tobing, D. L., & Wondabio, L. S. (2023). The Internal Auditor’s Information Technology Competency Measurement Scale Development. In J. Said, D. Daud, N. Erum, N. B. Zakaria, S. Zolkaflil, & N. Yahya (Eds.), Building a Sustainable Future: Fostering Synergy Between Technology, Business and Humanity, vol 131. European Proceedings of Social and Behavioural Sciences (pp. 709-722). European Publisher. https://doi.org/10.15405/epsbs.2023.11.60