Abstract

In the modern world, building a stronger safety culture and developing risk thinking are becoming an urgent necessity, as the living environment grows more complicated and the interconnections in technical systems become increasingly unsafe. This study aims to justify the use of pedagogical approaches to achieve this goal. The systematic approach is presented from the standpoint of the doctrine of complexly organized objects (systems), while the competence building approach characterizes the possibility of using the acquired fundamental knowledge and skills to work with complex technical systems. The authors substantiate their view that integrating the systematic and competence building approaches will yield the greatest efficiency in the pedagogical process of training specialists for work that requires developed risk-based thinking. In addition, in the field of protecting the population from various threats, the efforts of specialists are currently focused on the following areas: hazard identification, risk assessment and emergency forecasting; development of measures to reduce risk and to improve the effectiveness of protecting the population and territories; state regulation in the field of risk reduction; and the improvement and development of emergency response forces and means. Implementing all these areas requires specialists with well-formed risk-based thinking.

Keywords: Pedagogical conditions, safety culture, systematic approach, competence building approach, professional competence, risk thinking

Introduction

In the twentieth and twenty-first centuries, the complexity and interconnectedness of technical and techno-social systems have increased enormously, making these systems much more vulnerable.

It is obvious that failures in such systems can lead to human casualties, harm the environment and destabilize the economy (Clearfield & Tilchik, 2018). This points to a contradiction between the need to train highly qualified specialists in technogenic risk management and the lack of prescribed standards for their training. In addition, the understanding of risk-based thinking and the methodology of its formation have not yet been fully developed.

Currently, in the Russian Federation, specialists (bachelors, masters and graduate students) are trained in the field of “Technosphere safety”, which covers ensuring human safety, including the formation of a technosphere that is comfortable for human life and activity and the minimization of the technogenic impact on the environment. In addition to professional competencies, which set the requirements for abilities in future professional activity (knowledge of methods, technologies, approaches, etc.), general cultural competencies are also established. One of these is the possession of a safety culture and risk-oriented thinking, in which safety and environmental issues are treated as the most important priorities in life and work (Order of the Ministry of Education and Science of Russia, 2016).

Problem Statement

The concept of a risk-based approach is introduced by the national standard of the Russian Federation “GOST R ISO 9001-2015 Quality Management Systems. Requirements”. However, the standard only states that such an approach is needed to achieve an effective quality management system. To meet the requirements of this standard, organizations need to plan and implement actions that address risks and opportunities (Ministry of Education and Science of Russia, 2015). If by thinking we mean the cognitive activity of a person (an indirect and generalized way of reflecting reality), then the concept of risk-based thinking is, in fact, reduced to understanding the processes that can provoke the realization of risks in a particular activity of the organization. In addition, in the field of protecting the population from various threats, the efforts of specialists are currently focused on the following areas: hazard identification, risk assessment and emergency forecasting; development of measures to reduce risk and to improve the effectiveness of protecting the population and territories; state regulation in the field of risk reduction; and the improvement and development of emergency response forces and means. Implementing all these areas requires specialists with well-formed risk-based thinking (Korolev, Arefyeva, & Rybakov, 2015; Index for risk-management, 2015).

Research Questions

With regard to vocational education and the achievement of risk-based thinking as a developed competence, we recommend integrating the systematic and competence building approaches in mastering higher education programs in the area of “Technosphere Safety”.

Using the systematic approach, we proceed from the doctrine of complexly organized objects, that is, systems that have a structure of elements and parts and perform certain functions. The competence building approach, in turn, allows us to formulate key and professional competencies, i.e., the readiness of future safety specialists to use the acquired fundamental knowledge, skills and abilities to analyse the dangers inherent in complex technical and techno-social systems.

The standard under consideration for the area of “Technosphere safety” (Ministry of Education and Science of Russia, 2015) lists the types of professional activity for which graduates are prepared: design and development; service and maintenance; organizational and managerial; expert, supervisory and inspection auditing; and research. Given that technosphere safety is provided by people with higher technical education, i.e., with a type of thinking characterized as "technical", their professional training should be based on understanding the operation of technical systems, from the elementary to the most complex. In our view, one of the most effective approaches for this purpose is the Perrow concept.

Purpose of the Study

According to the concept of Perrow (as cited in Clearfield & Tilchik, 2018), two factors have a significant impact on the failure of a complex technical system. A specialist with developed risk-oriented thinking is able, at the level of professional reflection, to identify the most vulnerable system or part of a system.

The first factor describes how the elements of the system interact with each other. There are simple systems, called "linear", and complex ones. Linear systems operate in a well-defined sequence, performing the specified operations step by step. An example is the operation of a conveyor (assembly line), where the process is clearly algorithmized. Therefore, if the system malfunctions, the specialist responsible for the operation of the conveyor can immediately see at what stage the problem occurs.

However, as the technosphere develops, technical systems become significantly more complicated. Complex technical systems such as nuclear power plants, hydroelectric power stations and nuclear submarines are already becoming dominant in the modern world. In terms of the complexity of interaction between their parts, they can be compared to a web in which all nodes are connected with one another. Their interaction occurs through both direct and indirect influence on system elements which, as it may seem to an uninformed person, have no relation to each other at all.

Therefore, as mentioned above, a specialist who works with a complex technical system, and especially one responsible for the safety of its operation, must have highly developed risk-based thinking grounded in deep knowledge of how the system functions and of the possible risks of its failure. Moreover, most of the work of complex technical systems is invisible to the naked eye. The work of a specialist in this case can be compared to a hike along a path running along the edge of an abyss. In such a situation, a person engages all the senses responsible for physical safety: the visual system, the vestibular apparatus, the muscular system and everything else that keeps us from stepping over the edge of the cliff.

However, a person trying to control a complex technical system behaves like someone walking along the edge of an abyss while looking at the path through binoculars. Looking through binoculars, we see enlarged fragments of our route but not the whole way. In this case, we try to determine our actions piece by piece, focusing on the large element that is in front of our eyes at the moment and trying to perceive it as part of the whole picture of the danger.

Obviously, when working with complex technical systems, a specialist cannot go deep into the system for every problem in order to analyse clearly what is happening with each of its nodes. When many problems arise, specialists have to rely on indirect indicators. The terrible accidents at Chernobyl and Fukushima make it clear that nobody can be sent to deal with problems inside the reactor itself, so the picture of the incident is assembled from the readings of the instruments that are still functioning and show the pressure, water flow, etc. This cannot give a fully objective picture of the incident, which can in turn lead to errors in the analysis of the situation, especially since the synergistic effect of failures of individual nodes must be taken into account. Hence the conclusion that Perrow draws: it is impossible to understand complex technical systems well enough to predict all the possible consequences of even a small malfunction.

Now let us move on to the second factor, which describes how much slack ("backlash") there is in the functioning of the system. Here, the leading condition is how rigidly the elements are coupled: tight coupling means little slack and, therefore, a failure in one part easily affects another part. If there is a large amount of slack between the parts, the survivability of the system increases when problems arise in one element.
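The role of slack can be illustrated with a minimal Python sketch. It is a toy model introduced here for illustration only and is not part of the Perrow concept: the element names, the coupling values and the single `slack` threshold are all assumptions. A failure passes along a link only when the coupling of that link exceeds the available slack.

```python
from collections import deque


def propagate_failure(links, start, slack):
    """Return the set of elements that fail after `start` fails.

    links: dict mapping element -> list of (neighbour, coupling) pairs,
           where coupling lies in [0, 1] (1 means rigidly coupled).
    slack: buffer in [0, 1]; a failure passes along a link only if the
           coupling of that link is strictly greater than the slack.
    """
    failed = {start}
    queue = deque([start])
    while queue:
        element = queue.popleft()
        for neighbour, coupling in links.get(element, []):
            if coupling > slack and neighbour not in failed:
                failed.add(neighbour)
                queue.append(neighbour)
    return failed


# Hypothetical four-element system; names and numbers are illustrative only.
LINKS = {
    "valve": [("pump", 0.9), ("sensor", 0.4)],
    "pump": [("cooling", 0.8)],
    "sensor": [("cooling", 0.3)],
    "cooling": [],
}

tight = propagate_failure(LINKS, "valve", slack=0.1)   # little slack
loose = propagate_failure(LINKS, "valve", slack=0.85)  # large slack
```

In this sketch, with little slack the failure of the valve reaches all four elements, whereas with a large slack it stops at the pump.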

Research Methods

A specialist who works in the field of safety control of complex technical systems and possesses developed risk-based thinking should not only know but also feel the difference between the functioning of linear and complex systems. It is obvious that flawless operation cannot be guaranteed in complex, tightly coupled systems, because replacing nodes and using alternative methods rarely work, and failures often have synergistic effects. An accident can happen very quickly, and it will be very difficult simply to turn off the system so that the problem does not get out of control.

Let us return to the accidents at the Chernobyl and Fukushima nuclear power plants. Ensuring the safety of these technical systems, which are among the most complicated and dangerous for humanity, requires many specific conditions. Even a small malfunction in the production process, such as jamming of the compensating pressure valve, can lead to extremely undesirable consequences. As mentioned above, we cannot slow down or pause the full power of a nuclear power plant. The chain reaction proceeds quickly, and even if we stop it, a great deal of residual heat remains in the reactor. Here, much depends on the professionalism and developed risk thinking of the people responsible for the safety of the system. Professional reflexes should work like those of a person walking along the edge of a mountain path: in a critical situation there is no time to "look through binoculars" and consider the problem more broadly, because one must survive. Everything is decided by choosing the right moment.

If the problem grows rapidly, with fuel rods melting and radiation leaking because the reactor has overheated, it is useless to raise the coolant level after a long delay; the decision must be taken immediately, no matter what. A properly conducted analysis of the situation helps to avoid a major disaster.

A different response can be expected from a hazard analysis at an aircraft factory, where production is less tightly coupled. There, most parts are assembled separately, which makes it possible to detect problems and eliminate them before final assembly. The main task here is to prevent defects during the assembly of the aircraft, since they can lead to an accident in the air. This requires a risk analysis of the further consequences.

In both cases, however, the specialist is required to understand the functioning of the technical object as a system and to possess professional competencies related to that technical object.

Findings

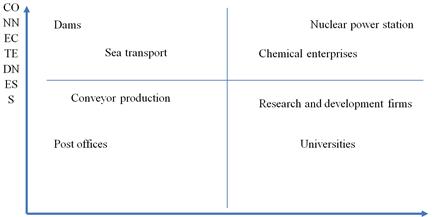

Obviously, not every technical system can be categorized unambiguously according to Perrow, and each case of analysis of system problems by a specialist will require an individual approach. However, in forming risk-based thinking, one can be guided by some general principles: it is necessary to understand how complex and how tightly coupled the elements are, and how likely a synergistic manifestation of problems is in the system at hand. Consider the diagram in the figure above.

The upper part of the matrix shows tightly coupled technical systems. It is obvious, however, that dams, despite the complexity of their design, are technically less complicated than nuclear power plants. They have fewer components and, as a result, fewer points at which an accident can originate.

The bottom of the matrix contains systems that are fairly primitive from a technical point of view, such as post offices and universities. Actions in them are not governed by a very strict order, and a failure in their work will not be critical in terms of the threat to the life and health of others.

Naturally, the post office is the simplest system, even compared to universities, where life is governed by a complicated bureaucratic mechanism with many departments, offices, academic units and so on. Universities perform various functions: scientific, educational, administrative, etc. Together they form a complex system, although an administrative rather than a technical one. Life at the university is multifaceted and is regulated both by administrative acts and by self-government bodies, which can lead to conflict situations. For example, a one-year contract concluded between the administration and a teacher can be challenged by a decision of the Academic Council of either the faculty or the university itself, and there are many such examples. However, since the connections in the "University" system are not rigid, there is always room to maneuver. The work of the university is not disrupted, and other faculties may not even know about the problem.

The most dangerous zone in the Perrow matrix is the upper right square. The systems in it have complex and rigid connections, and failures in them can lead to large-scale disasters. Such failures propagate as a "cascade", i.e., quickly and uncontrollably, producing a "domino effect". Let us recall how rapidly the accident at the Sayano-Shushenskaya hydroelectric power station developed and what catastrophic consequences it had. Moreover, as the situation worsens, its external manifestations become more ambiguous. Even with all due diligence, it will be difficult to diagnose the problem accurately, and it is also possible to aggravate it by addressing not the problem that is actually primary but the one that seems most important to us.
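The placement of systems in the matrix described above can be sketched in a few lines of Python. This is only an illustration under simplifying assumptions: the `System` type and the two boolean flags are hypothetical names, each dimension is reduced to a yes/no value, and the four placements follow the examples given in the text rather than any measurement.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class System:
    name: str
    complex_interactions: bool  # False means "linear"
    tight_coupling: bool        # False means "loose", i.e. plenty of slack


def quadrant(system):
    """Return the square of the matrix in which the system falls."""
    vertical = "upper" if system.tight_coupling else "lower"
    horizontal = "right" if system.complex_interactions else "left"
    return f"{vertical} {horizontal}"


def is_danger_zone(system):
    """Complex interactions combined with tight coupling."""
    return system.complex_interactions and system.tight_coupling


# Placements taken from the examples discussed in the text.
SYSTEMS = [
    System("nuclear power plant", complex_interactions=True, tight_coupling=True),
    System("dam", complex_interactions=False, tight_coupling=True),
    System("university", complex_interactions=True, tight_coupling=False),
    System("post office", complex_interactions=False, tight_coupling=False),
]

placement = {s.name: quadrant(s) for s in SYSTEMS}
dangerous = [s.name for s in SYSTEMS if is_danger_zone(s)]
```

Of the four examples, only the nuclear power plant falls into the upper right square. A real analysis would treat both dimensions as matters of degree rather than as binary flags.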

Fortunately, most accidents can be prevented. Their immediate causes are not the complexity or rigidity of the connections themselves. As is known from numerous sources, accidents usually result from reckless risk-taking, ignoring warning signals, problems in communication between people, poor professional training of personnel and errors in management.

By using the Perrow concept in the formation of risk-oriented thinking, it is possible to build an understanding of how to prevent accidents in complex technical systems, which are characterized by increased rigidity of connections and cause the most damage.

Conclusion

Thus, we can conclude that risk thinking is thinking based on the analysis and understanding of decision-making processes in the functioning of complex technical and techno-social systems and grounded in risk-oriented activity. The criterion for the development of risk-based thinking is the ability to analyse the greatest number of possible options per unit time and to choose the option that leads to the least adverse consequences (Muraveva, 2012).
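The criterion stated above can be expressed as a short Python sketch. It is a simplified illustration and not a method taken from the cited source: the function name `choose_option`, the idea of a fixed number of evaluations per unit time and the numerical harm estimates are all assumptions made here.

```python
def choose_option(options, estimate_harm, max_evaluations):
    """Pick the least harmful option among those analysed in time.

    options:         candidate actions, in the order they come to mind.
    estimate_harm:   function mapping an option to an estimated adverse
                     consequence (lower is better).
    max_evaluations: how many options can be analysed per unit time.
    """
    considered = options[:max_evaluations]
    if not considered:
        raise ValueError("no option could be analysed in the time available")
    return min(considered, key=estimate_harm)


# Hypothetical options with arbitrary harm estimates, for illustration only.
HARM = {"option A": 5, "option B": 2, "option C": 1}
OPTIONS = list(HARM)

slower_analyst = choose_option(OPTIONS, HARM.get, max_evaluations=2)
faster_analyst = choose_option(OPTIONS, HARM.get, max_evaluations=3)
```

Here the analyst who can evaluate only two options in the available time settles on option B, while the one who can evaluate three finds the less harmful option C.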

To achieve the greatest pedagogical effectiveness in forming risk-based thinking as a professional competence, the integration of the systematic and competence building approaches seems optimal.

Acknowledgments

This work was performed under the Russian Government Program of Competitive Growth of Kazan Federal University.

References

- Clearfield, K., & Tilchik, A. (2018). Invulnerability: Why systems fail and how to deal with it. Moscow: Azbuka-Atticus, CoLibri.

- Index for risk-management. (2015). Results 2015. INFORM. Retrieved from www/inform-index.org

- Korolev, V. Y., Arefyeva, E. V., & Rybakov, A. V. (2015). Prediction of the risks of industrial emergencies based on the assessment of the probability of damage resulting from emergencies, considered as a heterogeneous stream of extreme events. Scientific and Educational Problems of Civil Protection. Academy of Civil Protection EMERCOM of Russia, 2, 35-44.

- Ministry of Education and Science of Russia. (2015). GOST R ISO 9001-2015 Quality Management Systems. Requirements. Retrieved from http://docs.cntd.ru/document/1200124394

- Muraveva, E. V. (2012). Situational component technospheric risks. Journal of the Samara scientific center of Russian academy of Science, 1(3), 43-47.

- Order of the Ministry of Education and Science of Russia. (2016). No. 246 (amended on July 13, 2017) "On the approval of the federal state educational standard of higher education in the field of training 03.20.01 Technosphere safety (undergraduate level)" (Registered in the Ministry of Justice of Russia on 04.20. 2016 No. 41872). Retrieved from http://www.consultant.ru/document/cons_doc_LAW_197236/

Copyright information

This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License.

About this article

Publication Date

23 January 2020

eBook ISBN

978-1-80296-077-8

Publisher

European Publisher

Volume

78

Edition Number

1st Edition

Pages

1-838

Subjects

Teacher, teacher training, teaching skills, teaching techniques

Cite this article as:

Alekseeva, E. I., Dobrotvorskaya*, S. G., & Muravyova, E. V. (2020). Pedagogical Conditions For The Formation Of Risk Thinking. In R. Valeeva (Ed.), Teacher Education - IFTE 2019, vol 78. European Proceedings of Social and Behavioural Sciences (pp. 738-744). European Publisher. https://doi.org/10.15405/epsbs.2020.01.80