Abstract

Students evaluate course quality aspects both at the end of the semester and right after student performance evaluations, and the results of the two often do not correspond. The research question is whether there is a significant difference between end-of-term course evaluations and the evaluations given right after midterm tests and exams. The purpose of the study is to investigate how students perceive the ways and methods of student performance evaluation and the related lecturer-student interactions by analysing correlations between end-of-term course evaluations and immediate evaluations. The impact of peer evaluations on end-of-term course evaluations is also discussed. The research method is based on determining and evaluating the correlations between the overall course evaluations and the results of student evaluations given within the framework of the peer review program. Differences between average evaluations were also investigated. All data were analysed statistically. We identify the courses for which there are significant differences between the evaluations given by the same group of students at the end of the semester and right after student performance evaluation sessions. We also highlight how student performance evaluations contribute to overall course evaluations. The results of the statistical analyses point to the need to restructure the course evaluation questionnaire and to reconsider the role of peer evaluations in lecturer-student interactions.

Keywords: Course evaluation; peer review; student satisfaction

Introduction

Teaching quality assurance has come to the fore in the renewal of higher education (HE) systems worldwide, as institutions now compete for students at both national and international levels. The traditional model, in which most students were viewed as passive recipients of teaching, has been giving way to a growing emphasis on active, independent learning (Mizikaci, 2006). To recruit and retain students, institutions should aim to enhance student satisfaction. In response to these trends, ‘the practice of collecting student ratings of teaching has been widely adopted by universities all over the world as part of the quality assurance system’ (Kwan, 1999, p. 181). Therefore, to ensure the quality of HE courses, every HE institution must have a working system to monitor and measure, among other things, teaching performance, putting students and their experience at the forefront (Andersson et al., 2009).

Student evaluations of teaching effectiveness are commonly used to provide formative feedback to improve teaching, course content and structure; a summary measure for promotion and tenure decisions; and information to students for the selection of courses and teachers (Marsh and Roche, 1993; Marsh, 2007; Wiers-Jenssen et al., 2002; Gruber et al., 2010a). Rowley (2003) identified four main reasons for collecting student feedback: to provide auditable evidence that students have had the opportunity to comment on their courses and that such information is used to bring about improvements; to encourage student reflection on their learning; to allow institutions to benchmark and to provide indicators that will contribute to the reputation of the university in the marketplace; and to provide students with an opportunity to express their level of satisfaction with their academic experience.

Lecturers are the pillars of excellence of an institution, and their role has a high impact on the quality of teaching and learning (Ihsan et al., 2012). To assess the quality of teaching and learning from this perspective, a wide range of instruments, both qualitative and quantitative, are used to collect student feedback (e.g. Brennan and Williams, 2004; Richardson, 2005; Brochado, 2009; Gruber et al., 2010a). When the focus is on course quality, formal measurement tends to be conducted through course evaluations completed by students, mainly at the end of a term. Such evaluation is considered a feedback mechanism that pinpoints the strengths of courses, identifies areas for improvement and should help to reduce the gap between what lecturers and what students perceive as teaching quality (Venkatraman, 2007; Tóth and Jónás, 2014). It gives students the opportunity to comment on their courses, and the information is also used to encourage student reflection on their learning (Rowley, 2003; Grebennikov and Shah, 2013). Satisfied students are likely to attend another lecture delivered by the same lecturer or to opt for another module or course taught by her/him (Banwet and Datta, 2003). Banwet and Datta also found that students’ intentions to re-attend or recommend lectures depended on their perceptions of quality and the satisfaction they felt after attending previous lectures. For students, quality education means the quality of the lecturer, including classroom delivery, feedback to students during the session and on assignments, and the relationship with students in the classroom; all these dimensions relate to the assessment of teaching quality and classroom performance (Hill et al., 2003).

Problem Statement

In recent years, Hungarian HE institutions have had to face an increasingly competitive environment as well as evolving customer needs (Elliott and Healy, 2001; Nguyen et al., 2004; Bedzsula and Kövesi, 2016a, 2016b). Quality-focused approaches and systems are by now well known in Hungarian HE institutions and have been applied with varying success. Over the last couple of decades, and still today, their spread has been fuelled by significant structural, operational and financial changes in the HE sector. These changes are similar to those that other institutions face in their own environments: HE has become a mass-market service, characterized by a growing number of students and increasingly diverse institutions. As a result, the state has practically stopped providing financial support to students of business administration, so the great majority of our students pay tuition fees. These changes have raised many quality issues in the processes of education. At the same time, competition for students among institutions has increased as well (Tóth et al., 2013).

In Hungary, the need to measure the quality of teaching at university level is related to a process of autonomy. Traditional teacher-centrism is constantly being challenged: the position of students is gradually strengthening, and the role and influence of students in the teaching process are undergoing profound changes. Students are no longer merely passive recipients of knowledge. They are now recognised as the principal ‘stakeholder’ of any higher education institution (HEI) and must be given a voice that is both listened to and acted upon in order to enhance the quality of the total learning experience. Most HE institutions collect student feedback of some sort; however, there is little standardisation in how sophisticated this collection is and in how the results are used and fed back into the teaching processes. There is still little consensus on how to use and, more importantly, how to act upon such data.

The Hungarian law on HE stipulates and regulates universities' student satisfaction surveys, and as a result several different solutions can be observed in their execution and application (Bedzsula and Kövesi, 2016a). Some HEIs have set up teaching evaluation systems for each discipline, bringing the teaching of every lecturer within the scope of these evaluations. Many HEIs have launched online teaching evaluation systems for students to make evaluation easier and to facilitate comprehensive assessment. Some HEIs publish evaluation results in the form of institutional documents. Others have set up comprehensive motivation systems, or have linked teachers’ professional promotions, specialized training, teaching bonuses and stipends to student evaluations of teaching, issuing rewards and warnings to teachers on the basis of the results.

To create a balanced picture of teaching, the professional judgement of academic staff, supplementing the views of other stakeholders, is critical. Besides student evaluations and departmental supervision or coaching, the assessment of teaching performance may also draw on the professional judgement of academic staff through peer review and lecturers’ self-reflection. Our Faculty launched a unique peer review program in the academic year 2015/2016. ‘Peer support review’ has been identified as an essential process for reviewing both our teaching processes and the applied tools and methods, ‘catching mistakes’ and thereby improving the quality of the teaching service. A culture of peer review is an important ingredient and a critical factor in enhancing a quality improvement culture. The primary aim is to bring about changes in teaching practice and to introduce new teaching methods (Kálmán et al., 2016; Tóth et al., 2016). Our peer review process is not restricted to classroom observation. Communication and interaction with students, informing students about the course outline, the aims and objectives of the course and the means of student assessment, as well as consultations, are also integrated into the peer review process and its criteria system. Besides the traditional end-of-term course evaluations, the peer review program also allows students to give immediate feedback related to student performance assessments. However, in the aspects common to both surveys, the results of the two evaluation processes often do not correspond with each other.

Research Questions

Since the assessment of teachers’ classroom performance is a fundamental part of the university’s monitoring of undergraduate teaching quality, the primary aim of both kinds of feedback is to evaluate the quality of teachers’ classroom performance objectively and to give lecturers prompt information on students’ reactions to their teaching, so that they can continuously reflect on their teaching experience, identify areas for improvement and raise teaching quality.

At our University, student feedback is collected institutionally in several forms. Since 1999, course quality has been formally measured through end-of-term course evaluations completed by students, called the Student Evaluation of Education (SEE). SEE has proved useful for identifying the strengths of courses and areas for improvement, and for understanding the factors that contribute to student satisfaction (Tóth et al., 2013; Tóth et al., 2016). It is based on a questionnaire survey that has been revised and developed many times over the past two decades. The results of SEE are used to assess the quality of teachers’ classroom teaching, and the departments combine them with the results of peer review sit-ins and departmental supervision to evaluate teachers’ classroom teaching quality comprehensively (Bedzsula and Kövesi, 2016a). At the end of each semester, the institution’s electronic study system offers students the SEE questionnaire for each course. The questionnaire is completely anonymous; students can evaluate every course they took, provided that they received a final grade in it. Completing the questionnaire is not obligatory: students may skip any question, or even the whole questionnaire. The survey aims to evaluate elements of education quality, providing a comprehensive view of the semester-long educational work. The latest edition was released in Fall 2013, in line with the regulations of the National Law on Higher Education (BME, 2013).

The course evaluation questionnaire consists of two parts: the first contains questions that depend on the type of course (lecture, seminar or lab), while in the second, students answer general questions about the lecturer (Bedzsula and Kövesi, 2016a). For courses, the questionnaire contains ten questions, SEE1 to SEE10 (BME, 2013).

The first five questions relate to the course itself, while the other five relate to the lecturer. For SEE1, SEE3, SEE4, SEE7 and SEE8, students answer on a 5-point Likert scale, where 1 stands for ‘unsatisfactory’ and 5 for ‘excellent’, while SEE2, SEE6 and SEE9 require ‘yes’ or ‘no’ answers. SEE5 and SEE10 are open questions.

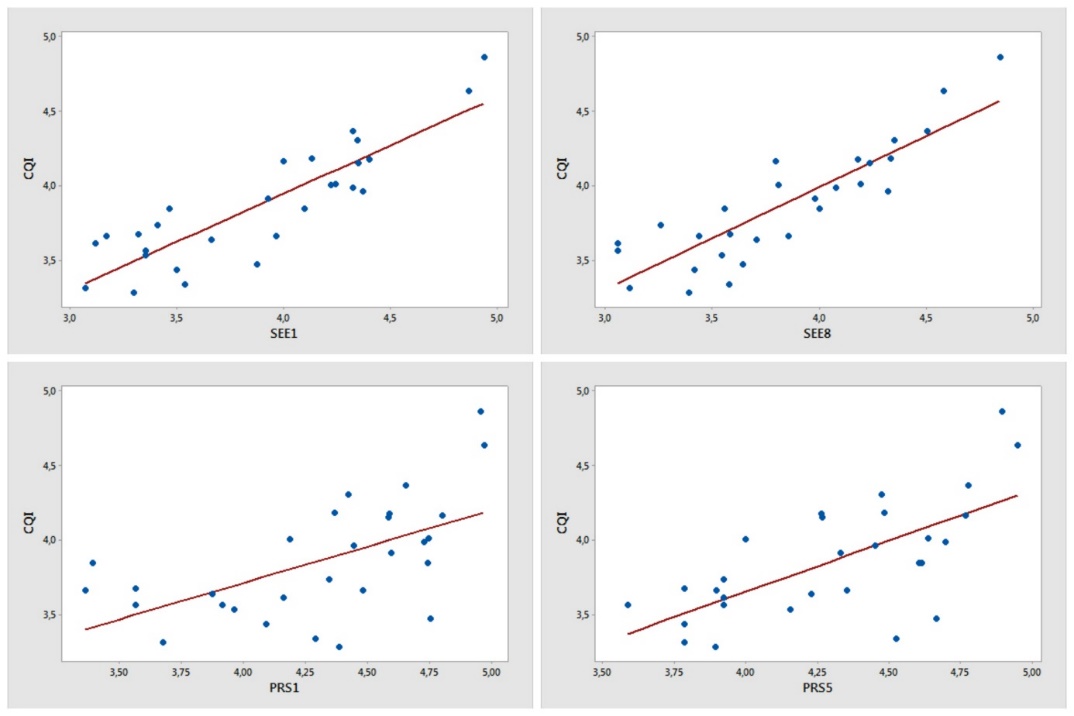

Beyond the average values of the individual questions related to instructors and courses, the current SEE system also defines new indices that combine several of these assessments. One such index is the Course Quality Index (CQI), which combines the professor's lecture quality (SEE7) and the overall assessment of the course (SEE4); for a course consisting only of lectures with a single instructor, it is calculated as follows (BME, 2013):

where AVSEE7 and AVSEE4 are the means of the answers given to questions SEE7 and SEE4, and n7 and n4 are the numbers of students who gave valid answers to SEE7 and SEE4, respectively (N = n7 + n4).
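The definitions above (two question means, each with its own count of valid answers, and N = n7 + n4) are consistent with reading the CQI as a response-weighted mean of AVSEE7 and AVSEE4. The Python sketch below assumes that reading; the function name and the example numbers are hypothetical, and it is not the official BME calculation (BME, 2013).

```python
def course_quality_index(av_see7: float, n7: int, av_see4: float, n4: int) -> float:
    """Sketch of the CQI for a lecture-only course with a single instructor.

    ASSUMPTION: CQI is taken to be the response-weighted mean of the two
    question averages, (n7 * AVSEE7 + n4 * AVSEE4) / N with N = n7 + n4.
    """
    n_total = n7 + n4  # N in the text: all valid answers to SEE7 and SEE4
    if n_total == 0:
        raise ValueError("no valid answers to SEE7 or SEE4")
    return (n7 * av_see7 + n4 * av_see4) / n_total


# Made-up inputs for illustration only (not data from the study):
# 40 valid SEE7 answers averaging 4.2 and 38 valid SEE4 answers averaging 3.9.
print(round(course_quality_index(4.2, 40, 3.9, 38), 3))  # → 4.054
```

Under this assumption the question with more valid answers pulls the index towards its own mean; when n7 = n4 the sketch reduces to the simple average of AVSEE7 and AVSEE4.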

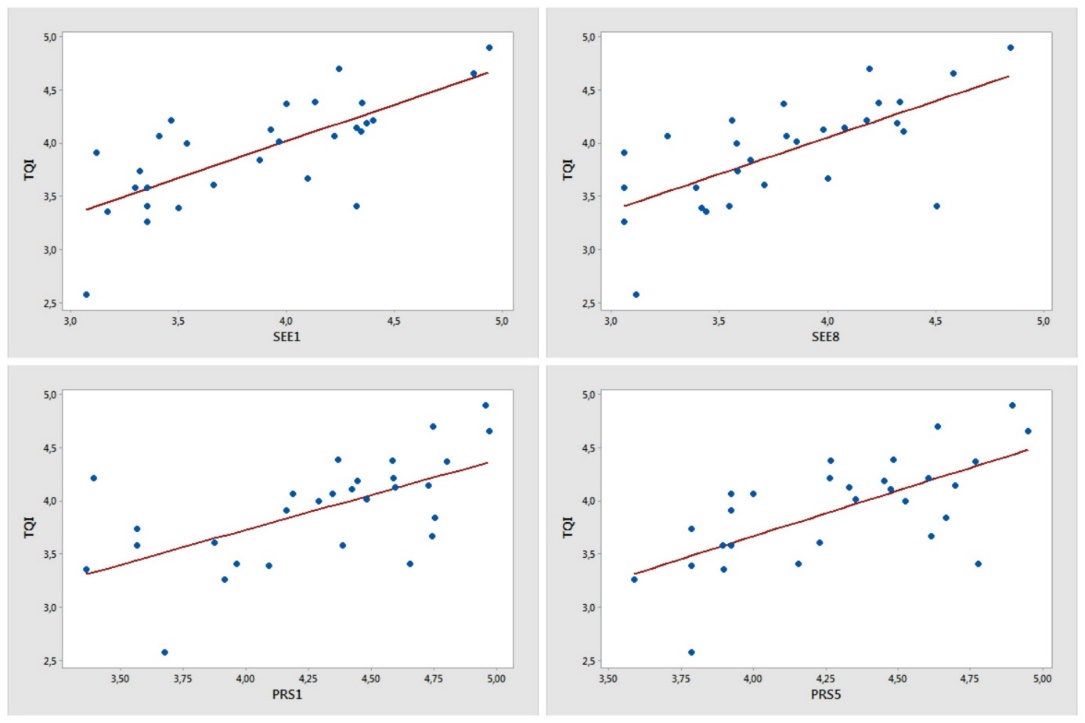

The Teaching Quality Index (TQI) is also a combined index, showing the aggregated teaching evaluations of each lecturer (for lectures only):

where AVSEE7 is the average of the answers to SEE7; a is the number of lectures (courses); ni is the number of students giving a valid answer to the question for course i; and N is the total number of students who gave valid answers to the question across all the courses.
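Read the same way, the TQI definitions above describe a mean of per-course SEE7 averages weighted by each course's number of valid answers. The following sketch assumes that form, TQI = Σ ni · AVSEE7,i / N over the a courses; the function and the example values are hypothetical illustrations, not the University's implementation.

```python
from typing import Sequence, Tuple


def teaching_quality_index(courses: Sequence[Tuple[float, int]]) -> float:
    """Sketch of a lecturer's TQI over their a lecture courses.

    Each element is (AVSEE7 for course i, n_i). ASSUMPTION: the TQI is taken
    to be the response-weighted mean sum(n_i * AVSEE7_i) / N, N = sum(n_i).
    """
    n_total = sum(n for _, n in courses)  # N: valid SEE7 answers over all courses
    if n_total == 0:
        raise ValueError("no valid SEE7 answers for this lecturer")
    return sum(n * av for av, n in courses) / n_total


# Made-up example: one large lecture (60 answers, mean 4.5) and one small one (20 answers, mean 3.5).
print(teaching_quality_index([(4.5, 60), (3.5, 20)]))  # → 4.25
```

With this weighting, a lecturer's large courses dominate the index, and for a single course the TQI is simply that course's AVSEE7.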

The TQI plays a significant role in lecturers' reputation; therefore, appearing on the list of the 100 best professors, which is published every semester, is considered a great achievement (Bedzsula and Kövesi, 2016a).

Besides evaluating the quality of courses and lecturers at the end of the term, students give immediate feedback during the peer review process, right after student performance evaluations, by expressing their judgement on a 5-point Likert scale in eight aspects (PRS1

Based on the course evaluation and peer review results of the last two academic years, the following research question arose when comparing the corresponding assessment aspects of the two kinds of evaluation: is there a significant difference between end-of-term course evaluations and the evaluations given right after midterm tests and exams?

Purpose of the Study

The primary aim of this paper is to present and illustrate course evaluation practices through the example of the Budapest University of Technology and Economics in Hungary. As a result of recent quality enhancement efforts, students evaluate course-specific quality aspects both right after midterm tests and exams, for courses taking part in the peer review program, and at the end of the term, for all running courses. Our research addresses the question of whether there are significant differences between end-of-term course evaluations and the evaluations given right after student assessments.

This paper aims to investigate how students perceive the ways and methods of student performance evaluation and the related lecturer-student interactions by analysing correlations between end-of-term course evaluations and immediate evaluations. The impact of peer evaluations on end-of-term course evaluations is also discussed.

Research Methods

The main aim of the statistical analysis is to compare the students’ judgement on midterm tests or exams right after student performance evaluations and at the end of the semester and to examine the contribution of student performance measurements to the overall course and lecturer evaluations. The statistical analysis was conducted based on the following descriptive statistics (see Table

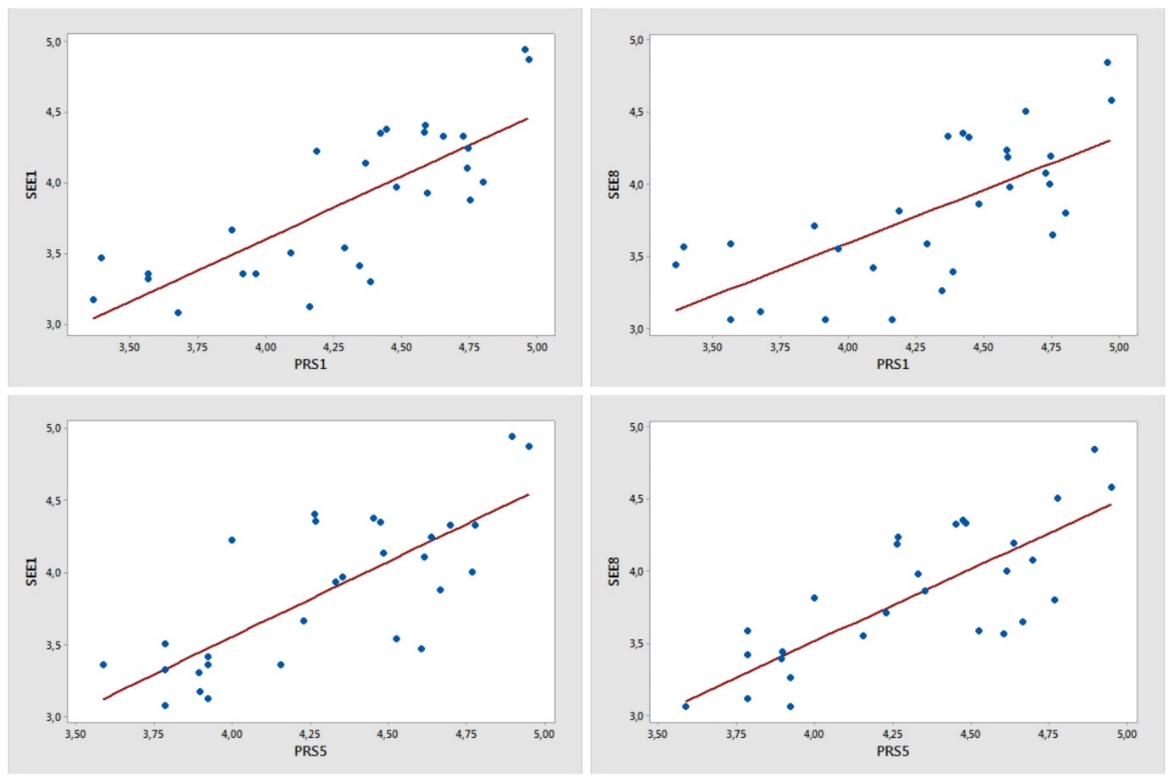

The questionnaires used to evaluate courses and lecturers contain two questions related to student performance evaluation: SEE1 refers to the supporting teaching materials provided by the lecturer, while SEE8 covers the suitability of midterm tests and exams for evaluating students’ performance. Two questions of the peer review survey, which students fill out right after midterm tests and exams, cover the same aspects: PRS1 concerns the availability and usefulness of teaching materials, and PRS5 asks students to judge how well the midterm test questions match the requirements. That is, SEE1 and PRS1 concern the instructional materials, while SEE8 and PRS5 concern the adequacy of the requirements and midterm tests for evaluating students’ performance.

The statistical analysis included fitting linear regression models between the variables. In addition, paired t-tests were applied to test the differences between the means of the two kinds of evaluation in the aspects above, and the correlations between specific questions of the two surveys were also analysed.
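A paired t-test compares two ratings of the same unit, so each immediate (peer review survey) rating must be matched with the end-of-term rating of the same aspect. The dependency-free Python sketch below assumes the pairs are per-course averages of SEE1/PRS1 (or SEE8/PRS5); the function name and the numbers are hypothetical, not the study's data. If SciPy is available, `scipy.stats.ttest_rel(prs1, see1)` returns the same statistic together with a two-sided p-value.

```python
import math
import statistics
from typing import Sequence, Tuple

# Item pairs that ask about the same aspect in the two surveys.
PAIRED_ITEMS = {"SEE1": "PRS1", "SEE8": "PRS5"}


def paired_t(immediate: Sequence[float], end_of_term: Sequence[float]) -> Tuple[float, int]:
    """Paired t statistic and degrees of freedom for matched ratings.

    d_i = immediate_i - end_of_term_i;  t = mean(d) / (s_d / sqrt(n));  df = n - 1,
    where s_d is the sample standard deviation of the differences.
    """
    if len(immediate) != len(end_of_term):
        raise ValueError("paired samples must have the same length")
    diffs = [a - b for a, b in zip(immediate, end_of_term)]
    n = len(diffs)
    if n < 2:
        raise ValueError("need at least two pairs")
    s_d = statistics.stdev(diffs)  # sample standard deviation (n - 1 denominator)
    if s_d == 0:
        raise ValueError("all differences are identical; t is undefined")
    return statistics.mean(diffs) / (s_d / math.sqrt(n)), n - 1


# Made-up per-course averages for illustration only (not the study's data):
prs1 = [4.6, 4.4, 4.8, 4.2, 4.5]  # immediate ratings of teaching materials
see1 = [4.1, 4.3, 4.4, 3.9, 4.2]  # end-of-term ratings of the same aspect
t, df = paired_t(prs1, see1)
print(f"t = {t:.2f}, df = {df}")  # → t = 4.82, df = 4
```

A positive t means the immediate ratings exceed the end-of-term ratings on average; swapping the argument order flips the sign but not the p-value of a two-sided test.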

Findings

The following figure (Figure

The cumulative evaluation indices CQI and TQI refer to the judgement of the course as a whole and the reputation of the lecturer, respectively. The contributions of the variables to these aggregate evaluations are depicted in Figure

Based on illustrations of Figure

The modest correlation (R-sq = 49.1%) between the cumulative course evaluation and the lecturer’s reputation suggests that students take different factors into consideration when judging a course and its lecturer. On the one hand, besides question SEE4 (Evaluate the course on the whole), the two questions related to midterm tests, supporting materials and requirements have the highest influence on the final course evaluation. On the other hand, each evaluation dimension of the SEE shows a more moderate correlation with the lecturer’s final reputation (TQI). One of the main purposes of the peer review program was precisely to broaden the evidence base used to assess quality, that is, to take into account data sources that the SEE survey does not sufficiently measure. Tóth et al. (2016) have already examined the contribution of peer review to the overall course evaluation. They concluded that the peer review program covered several aspects that affect teaching quality but are not sufficiently measured by SEE. For the peer review program, higher correlations were found with the Teaching Quality Index. This is because peer reviewing focuses on teaching skills and practices rather than on judging the professional content. That is, TQI and peer reviewing try to assess the ‘soft dimensions’ of teaching quality, while SEE and CQI are more likely to reflect the knowledge gained through the course. In Tóth et al. (2016), student satisfaction survey results were only available for the first courses peer reviewed in the fall semester of 2015, whose lecturers could implement the changes suggested by the peer reviewers during the fall semester of 2016.
The paired t-tests conducted on the CQI and TQI indices of these courses for the academic years 2015/2016 and 2016/2017 corroborate the importance of the peer review program, since both indices show a significant increase over this period (p-values of 0.039 and 0.040, respectively). Despite the weaker correlation between the immediate feedback given within the peer review program and the final course evaluations, this evidence further emphasizes the importance of peer reviewing in developing teaching practices.

Conclusion

Both the SEE and the peer review survey have become basic elements of the Faculty's quality culture in many respects. Most lecturers and students monitor the results regularly. Both systems, however, have also received criticism, as we do not know students' habits and reasons for completing the surveys. Regarding students, it is essential to maintain their interest in the evaluation system and to achieve the highest possible response rates. The more students complete the evaluation, the more professors trust how the systems work and the results they generate (Bedzsula and Kövesi, 2016a).

On the other hand, while the literature supports the view that students can provide valuable information on teaching effectiveness if the evaluation is properly designed, there is broad consensus in the literature that students cannot judge all aspects of faculty performance (El Hassan, 2009; Martínez-Gómez et al., 2011; Cashin, 1983; Seldin, 1993; Spooren et al., 2013; Beleche et al., 2012). Students should not be asked to judge whether the materials used in the course are up to date or how well the instructor knows the subject matter of the course (Seldin, 1993). The students’ background and experience may not be sufficient for an accurate assessment, so their conclusions may be invalid. Another recurring question concerns the honesty of students’ answers and their willingness to take part in any kind of evaluation process (Ihsan et al., 2012).

Considerable research has investigated the reliability and validity of student ratings. Reliability studies (Wachtel, 1998; Marsh, 2007) generally address the question ‘are student ratings consistent both over time and from rater to rater?’. Our results confirm that students give significantly better evaluations right after midterm tests and exams than in the final course evaluations; therefore, student ratings are not consistent over time. This bias may be addressed by applying fuzzy Likert-type questionnaires, which can improve the reliability of such evaluations (see e.g. Jónás et al., 2017). Moreover, since the quality of courses is not homogeneous and varies from subject to subject, the fuzzy methodology may help to handle this problem as well.

Validity studies, in turn, address the questions ‘Do student ratings measure teaching effectiveness?’ and ‘Are student ratings biased?’ (Shevlin et al., 2000; Spooren et al., 2013; Marsh, 2007). In our case, the bias of student evaluations could be reduced by alternative evaluation methods within the peer review program.

Overall, the literature supports the view that properly designed student ratings can be a valuable source of information for evaluating certain aspects of faculty teaching performance. Taking the issues above into consideration, our Faculty must address these questions in order to improve the quality of courses and lecturers and to feed the results back in a way that leads to value-adding improvements (e.g., Gruber et al., 2010b; Looney, 2011; Denson et al., 2010).

Acknowledgments

The presentation of this paper at the 8th ICEEPSY Conference has been supported by Pallas Athéné Domus Animae Foundation;

References

- Andersson, P.H., Hussmann, P.M. and Jensen, H.E., (2009), “Doing the right things right – Quality enhancement in Higher Education”, In SEFI 2009 Annual Conference.

- Banwet, D.K. and Datta, B. (2003), “A study of the effect of perceived lecture quality on post-lecture intentions”, Work Study, Vol. 52 No. 5, pp. 234-43.

- Bedzsula, B. and Kövesi, J., (2016a), “Feedback of student course evaluation measurements to the budgeting process of a faculty. Case study of the Budapest University of Technology and Economics Faculty of Economic and Social Sciences”, In: Su Mi Dahlgaard-Park, Jens J. Dahlgaard (eds.): 19th QMOD-ICQSS Conference International Conference on Quality and Service Sciences, Rome, Italy, 2016.09.21-2016.09.23. Lund: Lund University Library Press, 2016. pp. 216-228.

- Bedzsula, B. and Kövesi, J., (2016b), “Quality improvement based on a process management approach, with a focus on university student satisfaction”, Acta Polytechnica Hungarica, 13(6), pp. 87-106.

- Beleche, T., Fairris, D. and Marks, M., (2012), “Do course evaluations truly reflect student learning? Evidence from an objectively graded post-test”, Economics of Education Review, 31(5), pp.709-719.

- BME, (2013), “Az oktatás hallgatói véleményezésének szabályzata” (Regulation of Student Evaluation of Education), Budapest University of Technology and Economics, H-Budapest.

- Brennan, J. and Williams, R., (2004), “Collecting and using student feedback. A guide to good practice.” Learning and Teaching support network (LTSN), The Network Centre, Innovation Close, York Science Park. York, YO10 5ZF, p.17.

- Brochado, A., (2009), “Comparing alternative instruments to measure service quality in higher education”, Quality Assurance in education, 17(2), pp.174-190.

- Cashin, W.E., (1983), “Concerns about using student ratings in community colleges”, New Directions for Community Colleges, 1983(41), pp.57-65.

- Denson, N., Loveday, T. and Dalton, H., (2010), “Student evaluation of courses: what predicts satisfaction?”, Higher Education Research & Development, 29(4), pp.339-356.

- El Hassan, K., (2009), “Investigating substantive and consequential validity of student ratings of instruction”, Higher Education Research & Development, 28(3), pp.319-333.

- Elliott, K.M. and Healy, M.A., (2001), “Key factors influencing student satisfaction related to recruitment and retention”, Journal of marketing for higher education, 10(4), pp.1-11.

- Grebennikov, L. and Shah, M., (2013), “Monitoring trends in student satisfaction”, Tertiary Education and Management, 19(4), pp.301-322.

- Gruber, T., Fuß, S., Voss, R. and Gläser-Zikuda, M., (2010a), “Examining student satisfaction with higher education services: Using a new measurement tool”, International Journal of Public Sector Management, 23(2), pp.105-123.

- Gruber, T., Reppel, A. and Voss, R., (2010b), “Understanding the characteristics of effective professors: the student's perspective”, Journal of Marketing for Higher Education, 20(2), pp.175-190.

- Hill, Y., Lomas, L. and MacGregor, J., (2003), “Students’ perceptions of quality in higher education”, Quality assurance in education, 11(1), pp.15-20.

- Ihsan, A.K.A.M., Taib, K.A., Talib, M.Z.M., Abdullah, S., Husain, H., Wahab, D.A., Idrus, R.M., Abdul, N.A., (2012), “Measurement of Course Evaluation for Lecturers of the Faculty of Engineering and Built Environment”, UKM Teaching and Learning Congress 2011, Procedia – Social and Behavioral Sciences 60, 358-364.

- Jónás, T., Árva, G. and Tóth, Zs.E., (2017), “Application of a Pliant Arithmetic-based Fuzzy Questionnaire to Evaluate Lecturers’ Performance”, In: Su Mi Dahlgaard-Park, Jens J. Dahlgaard (eds.): 20th QMOD-ICQSS Conference International Conference on Quality and Service Sciences, Copenhagen (Elsinore), Denmark, 2017.08.05-2017.08.07. Lund: Lund University Library Press, 2017.

- Kálmán, A., Tóth, Zs.E. and Andor, Gy., (2016), “Improving and assessing the quality and effectiveness of teaching by innovative peer review approach: Recent efforts at the Budapest University of Technology and Economics for the modernisation and quality improvement of education”, In Tiina Niemi and Hannu-Matti Järvinen (eds.). 44th Annual Conference of the European Society for Engineering Education (SEFI): Engineering Education on Top of the World: Industry University Cooperation, Tampere, Finland, 2016.09.12-2016.09.15. Brussels: European Society for Engineering Education (SEFI), 2016.

- Kwan, K., (1999), “How fair are student ratings in assessing the teaching performance of university teachers?”, Assessment and Evaluation in Higher Education, 24(2), pp. 181–195.

- Looney, J., (2011), “Developing High‐Quality Teachers: teacher evaluation for improvement”, European Journal of Education, 46(4), pp.440-455.

- Marsh, H.W., (2007), “Students’ evaluations of university teaching: Dimensionality, reliability, validity, potential biases and usefulness”, In The scholarship of teaching and learning in higher education: An evidence-based perspective (pp. 319-383). Springer Netherlands.

- Marsh, H. and Roche, L., (1993), “The use of students’ evaluations and an individually structured intervention to enhance university teaching effectiveness”, American Educational Research Journal, 30, pp. 217–251.

- Martínez-Gómez, M., Sierra, J.M.C., Jabaloyes, J. and Zarzo, M., (2011), “A multivariate method for analyzing and improving the use of student evaluation of teaching questionnaires: a case study”, Quality & Quantity, 45(6), pp.1415-1427.

- Mizikaci, F., (2006), “A systems approach to program evaluation model for quality in higher education”, Quality Assurance in Education, 14(1), pp.37-53.

- Nguyen, N., Yoshinari, Y. and Shigeji, M., (2004), “Value of higher education service: different view points and managerial implications”, Proceedings of Second World Conference on POM and 15th Annual POM Conference, Cancun, 30 April‐3 May.

- Richardson, J.T., (2005), “Instruments for obtaining student feedback: A review of the literature”, Assessment & evaluation in higher education, 30(4), pp.387-415.

- Rowley, J., (2003), “Designing student feedback questionnaires”, Quality assurance in education, 11(3), pp.142-149.

- Seldin, P., (1993), “The use and abuse of student ratings of professors”, The chronicle of higher Education, 39(46), p.A40.

- Shevlin, M., Banyard, P., Davies, M. and Griffiths, M., (2000), “The validity of student evaluation of teaching in higher education: love me, love my lectures?”, Assessment & Evaluation in Higher Education, 25(4), pp.397-405.

- Spooren, P., Brockx, B. and Mortelmans, D., (2013), “On the validity of student evaluation of teaching: The state of the art”, Review of Educational Research, 83(4), pp.598-642.

- Tóth, Zs.E., Jónás, T., Bérces, R. and Bedzsula, B., (2013), “Course evaluation by importance-performance analysis and improving actions at the Budapest University of Technology and Economics”, International Journal of Quality and Service Sciences, 5(1), pp. 66-85.

- Tóth, Zs.E. and Jónás, T., (2014), “Enhancing Student Satisfaction Based on Course Evaluations at Budapest University of Technology and Economics”, Acta Polytechnica Hungarica, 11(6), pp.95-112.

- Tóth, Zs.E., Andor, Gy. and Árva, G., (2016), “Peer review of teaching at Budapest University of Technology and Economics - Faculty of Economic and Social Sciences”, In: Su Mi Dahlgaard-Park, Jens J. Dahlgaard (eds.): 19th QMOD-ICQSS Conference International Conference on Quality and Service Sciences, Rome, Italy, 2016.09.21-2016.09.23. Lund: Lund University Library Press, 2016. pp. 1766-1779.

- Venkatraman, S., (2007), “A framework for implementing TQM in higher education programs”, Quality assurance in education, 15(1), pp.92-112.

- Wachtel, H.K., (1998), “Student evaluation of college teaching effectiveness: A brief review”, Assessment & Evaluation in Higher Education, 23(2), pp.191-212.

- Wiers-Jenssen, J., Stensaker, B.R. and Grøgaard, J.B., (2002), “Student satisfaction: Towards an empirical deconstruction of the concept”, Quality in higher education, 8(2), pp.183-195.

Copyright information

This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License.

About this article

Publication Date

16 October 2017

eBook ISBN

978-1-80296-030-3

Publisher

Future Academy

Volume

31

Edition Number

1st Edition

Pages

1-1026

Subjects

Education, educational psychology, counselling psychology

Cite this article as:

Tóth, Z. E., Surman, V., & Árva, G. (2017). Challenges In Course Evaluations At Budapest University Of Technology And Economics. In Z. Bekirogullari, M. Y. Minas, & R. X. Thambusamy (Eds.), ICEEPSY 2017: Education and Educational Psychology, vol 31. European Proceedings of Social and Behavioural Sciences (pp. 629-641). Future Academy. https://doi.org/10.15405/epsbs.2017.10.60